Getting started with MaaS

MAAS

Metal as a Service

Metal as a Service (MAAS) brings the language of the cloud to physical servers. It makes it easy to set up the hardware on which to deploy any service that needs to scale up and down dynamically; a cloud being just one example.

With a simple web interface, you can add, commission, update, decommission and recycle your servers at will. As your needs change, you can respond rapidly, by adding new nodes and dynamically re-deploying them between services. When the time comes, nodes can be retired for use outside the MAAS.

MAAS works closely with the service orchestration tool Juju to make deploying services fast, reliable, repeatable and scalable. More information: https://maas.ubuntu.com/

Note: MAAS will be used as part of the POD jump-start server so that OS deployment is handled by MAAS, with support for Ubuntu, CentOS, Windows, or customized images (as supported by the MAAS process).

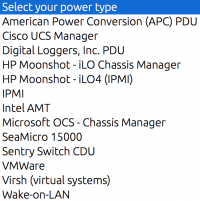

MAAS currently supports hardware from all major OEMs (HP, Dell, Intel, Cisco, SeaMicro, etc.), including power management of that hardware through IPMI as well as other power management software.

Video: Managing OPNFV Labs with MAAS (video ID Hz729WSEP0Q)

MAAS machine requirements:

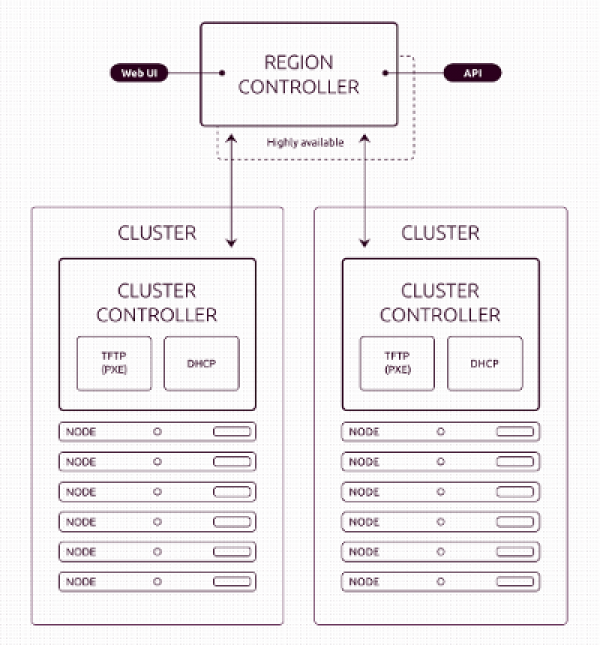

MAAS regional controller (each lab):

- The MAAS regional controller has the same hardware requirements as the cluster controller.

- Ubuntu 14.04 LTS is preferred, with the maas-region-controller package installed and configured on the system.

- The MAAS cluster controller talks to the regional controller through RPC and the API. Both controllers are expected to be on a public network so that they can communicate with each other.

MAAS Cluster controller (per pod):

- One Intel x86_64 machine in each lab serves as the cluster controller; it has the MAAS cluster controller installed and configured to control the lab head node.

- The hardware should have a 16-core processor and 32 GB of RAM for smooth operation, so that multiple VMs can also be installed on the MAAS cluster controller for further deployment.

- It should run Ubuntu 14.04 LTS with the MAAS cluster controller installed.

- Machines to be provisioned by MAAS should have their first network interface (eth0) on the admin network, connected to MAAS via a switch.

- All provisioned servers need their boot order set to PXE boot on eth0 first, then hard disk and other devices.

More information on how to register a cluster controller with the regional controller can be found at https://maas.ubuntu.com/docs/development/cluster-registration.html and https://maas.ubuntu.com/docs/development/cluster-bootstrap.html

Installation instructions

Region Controller

- install Ubuntu 14.04

- configure interfaces

- configure DNS

- sudo apt-add-repository ppa:maas/stable

- sudo apt update

- sudo apt upgrade

- sudo apt install -y maas-region-controller

- sudo dpkg-reconfigure maas-region-controller

- create a new root account

- log in

Cluster Controller

- install Ubuntu 14.04

- configure interfaces

- configure DNS

- sudo apt-add-repository ppa:maas/stable

- sudo apt update

- sudo apt upgrade

- sudo apt install maas-cluster-controller

- sudo dpkg-reconfigure maas-cluster-controller
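The two command sequences above differ only in the package name. As a minimal sketch (not an official MAAS tool), the following Python snippet assembles the command list for either role so it can be reviewed before being run by hand. The function name `install_commands` and the role keys are hypothetical, `sudo` and `-y` are applied to both roles as an assumption, and the manual steps (installing Ubuntu, configuring interfaces and DNS, creating the root account) are left out.

```python
# Sketch only: builds the apt command sequence listed above for each role.
# install_commands and the role keys are illustrative, not part of MAAS.
PACKAGES = {
    "region": "maas-region-controller",
    "cluster": "maas-cluster-controller",
}


def install_commands(role):
    """Return the shell commands from the steps above for the given role."""
    package = PACKAGES[role]  # raises KeyError for an unknown role
    return [
        "sudo apt-add-repository ppa:maas/stable",
        "sudo apt update",
        "sudo apt upgrade",
        f"sudo apt install -y {package}",
        f"sudo dpkg-reconfigure {package}",
    ]


if __name__ == "__main__":
    # Print the plan for a cluster controller; nothing is executed.
    for command in install_commands("cluster"):
        print(command)
```

The snippet only prints commands, which keeps it safe to run on any machine while the plan is being checked.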

Reference Links:

- https://wiki.opnfv.org/pharos/pharos_specification - recommendations for networks and hardware

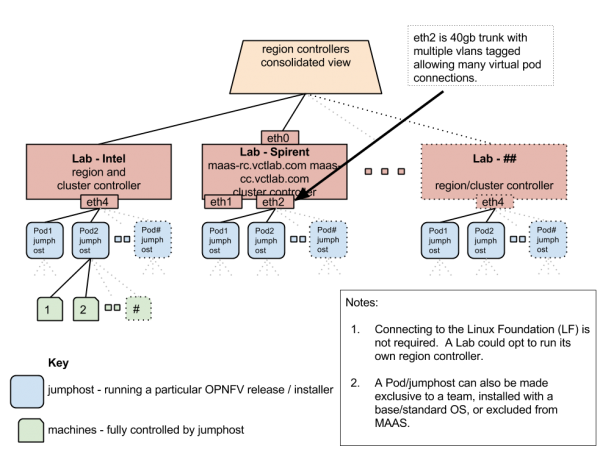

The example below has been updated for a cluster of hypervisors with virtual pods using VLANs. In this example there is 1 lab with 5 virtual pods.

The following items will be needed to add a MaaS cluster to the existing OPNFV MaaS Regional Controller:

MaaS Networks

NOTE: It's recommended to include the 4-digit VLAN ID in the network name if 802.1Q VLANs are used. This is just an example.

- 1g - Shared Lab External Network for Cluster Controller (LabExt) - typically connected to Firewall WAN port

- 1g - Shared Lab Internal Management Network for Lights Out, Cluster Controller, Jumpboxes, UPS, PDU, etc… (LabMGMT) - connect to drac, ilo, ipmi, and eth0 native access port

- 1g - 5 x Isolated Pod Lights Out Network VLANs connect to eth1 as a trunk - for virtual pods, VMs can be built here.

- 1g - 5 x Isolated Pod Public Network VLANs connect to eth1 as a trunk

- 1g - 5 x Isolated Pod Admin Network VLANs connect to eth1 as a trunk

- 10g - 10 x Pod Private Network VLANs for Compute VMs - connect to eth2 as a trunk

- 10g - 10 x Pod Private Network VLANs for Compute VMs - connect to eth3 as a trunk
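To illustrate the recommendation of putting the 4-digit VLAN ID in the network name, here is a small sketch. The naming pattern (`Pod<N>-<purpose>-<VLAN>`), the function name `network_name`, and the VLAN IDs used are all assumptions made for illustration; the source only asks that the 4-digit ID appear in the name.

```python
# Sketch only: the naming pattern and the VLAN IDs below are hypothetical.
def network_name(purpose, pod, vlan_id):
    """Build a network name that ends with the zero-padded 4-digit VLAN ID."""
    if not 1 <= vlan_id <= 4094:  # usable 802.1Q VLAN ID range
        raise ValueError(f"VLAN ID out of range: {vlan_id}")
    return f"Pod{pod}-{purpose}-{vlan_id:04d}"


if __name__ == "__main__":
    # One admin network per virtual pod, for the 5 pods in this example.
    for pod in range(1, 6):
        print(network_name("Admin", pod, 100 + pod))
```

Zero-padding keeps the names the same length, so they sort and compare cleanly in the MaaS network list.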

MaaS Cluster Controller

- Ubuntu 14.04 LTS 64 bit machine - can be physical or virtual with public and private mgmt network interfaces

- the public interface should be reachable from the internet or via VPN from the regional controller machine

- NAT can also be used - Narinder set this up with Intel, possibly using a port mapping to port 443 (to be confirmed)

- create a user account for the services to run under. It is recommended to call it "ubuntu" and give it both a password and an SSH key. We will need a secure method to exchange these keys, using GPG signing and https://launchpad.net

Pods

- A cluster controller can manage one or more pods

- A pod will need one jumphost machine with its NIC1 on the mgmt private network and a second interface, NIC2, for the compute nodes to be set up on the pod

- The jumphost will be the PXE boot, DHCP and TFTP server on NIC2 for all the compute nodes of its pod.

MaaS JumpHost

- The cluster controller will install and configure the OS on a jumphost on the private mgmt network. This jumphost must be able to reach the internet. The cluster controller can be set up as a gateway to the internet, or an external gateway provided by the lab may be used instead.

- The cluster controller will be the DHCP and TFTP PXEBOOT server for the jumphosts.

Compute Nodes

- A pod consists of a Jumphost, some control nodes, and some compute nodes

- There is a minimum set of hardware resources required to run all the OPNFV services. Be sure the system(s) where you want to run all the needed services (OpenStack, SDN Controller, KVM host, DUT VNFs, and Test Machines) meet this minimum.

- It's possible to have an all-in-one configuration where MaaS can deploy an entire environment in a single machine.

- Recommended pod configuration: 5 machines (in addition to the Pod Jumphost, the Lab cluster controller, the Master OPNFV regional controller, Jenkins servers, etc.)

- Consult the pharos spec for hardware recommendations. It's possible to use either physical or virtual machines

- MaaS uses "power drivers" to support IPMI, VMware, UCS, etc: http://maas.ubuntu.com/docs/api.html see drawing below.

Proposed MAAS POC

STATUS: the MaaS Proof of Concept is complete - now moving status to Pilot Project with expanded roll out.

Labs involved with POC: (Don't add to this list - just update and correct it. The POC is over.)

- Orange Pod 2 - https://wiki.opnfv.org/opnfv-orange

- Intel Pod 6 - https://wiki.opnfv.org/get_started/intel_hosting

MaaS Pilot

STATUS: the MaaS Pilot Project expands on the POC. POC was completed in November 2015 before the OPNFV Summit. It was agreed to bring in more labs and refine the scope for MaaS within OPNFV labs. There are two main objectives for MaaS:

- Obtain a centralized bird's-eye dashboard view of all lab resources (bare metal and virtual server hardware) managed by MaaS

- MaaS controllers in each lab will receive requests from Jenkins jobs allowing jump hosts to be provisioned for the installer and SDN controller needed for specific developers or testers

MaaS Pilot Labs

Labs involved with Pilot: (Please update this list with your interest to participate in the Pilot Project)

- Orange Pod 2 - https://wiki.opnfv.org/opnfv-orange - working to resolve a configuration issue and reinstall

- Intel Pod 5 - https://wiki.opnfv.org/get_started/intel_hosting - ETA Dec 15 DavidB@Orange

- Intel Pod 6 - https://wiki.opnfv.org/get_started/intel_hosting - ETA Dec 15 DavidB@Orange

- Spirent - https://wiki.opnfv.org/pharos/spirentvctlab

- Dell Pod 2 - https://wiki.opnfv.org/dell_hosting - TPM Mark Wenning @canonical and Vikram @Dell - too busy

- Juniper Pod 1 - Iben is working with Samantha Jian-Pielak samantha.jian-pielak@canonical.com - network issue

- Juniper Pod 2 - TBD

- CENGN - TBD - Nicolas@Canonical - working on a plan

- UTSA - TBD 2016

- Huawei - TBD Prakash to inform us on status - no status - working on JOID virtual setup for ONOS Charm checked into JOID - needs documentation

- Ravello - OPNFV Academy for new B release users to evaluate and learn

- Other Pods coming - please add your info to this list

Matrix

There are 16 combinations of installers and SDN controllers being considered for OPNFV Release 2. These are reflected in this matrix:

| Installer/SDN | OVN | ODL Helium | ODL Lithium | Contrail | ONOS |
| --- | --- | --- | --- | --- | --- |
| Apex | OVN | Complete | WIP | WIP | ? |
| Compass | OVN | Complete | ? | ? | ? |
| Fuel | OVN | Complete | ? | WIP | ? |
| JOID | OVN | Complete | WIP | Complete | WIP |
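For tracking purposes, the matrix can also be held as a small data structure and tallied by status. The sketch below copies the statuses from the table; it assumes that the 16 combinations are the 4 installers times the 4 SDN controller columns (ODL Helium, ODL Lithium, Contrail, ONOS), so the OVN column is left out of the count. The names `MATRIX` and `tally` are illustrative.

```python
from collections import Counter

# SDN controller columns counted as combinations (OVN column excluded by assumption).
SDN_CONTROLLERS = ["ODL Helium", "ODL Lithium", "Contrail", "ONOS"]

# Status per installer, in the order of SDN_CONTROLLERS, copied from the matrix.
MATRIX = {
    "Apex": ["Complete", "WIP", "WIP", "?"],
    "Compass": ["Complete", "?", "?", "?"],
    "Fuel": ["Complete", "?", "WIP", "?"],
    "JOID": ["Complete", "WIP", "Complete", "WIP"],
}


def tally(matrix):
    """Count how many installer/SDN combinations are in each status."""
    return Counter(status for row in matrix.values() for status in row)


if __name__ == "__main__":
    counts = tally(MATRIX)
    print("combinations:", sum(counts.values()))
    for status, count in counts.items():
        print(status, count)
```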

The diagram above represents the end state of the different labs. The basic idea is to use the community labs to deploy different installers repeatedly and reliably. This will increase the usage of individual community labs and make them true integration labs alongside the Linux Foundation lab.

Network Requirements

- The MAAS regional controller will have connectivity to each individual community lab where deployment will take place.

- Each community data centre is expected to have a cluster controller with an interface (say eth4) connected to the jump hosts of the different pods within the data centre, also on eth4 only.

- Jump hosts and other deployment nodes need their boot order configured to PXE boot from eth0 first, then eth4, then the hard disk.

- The cluster controller will run DHCP/DNS/TFTP/PXE on the eth4 interface network to control the jump hosts.
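The boot order requirement above (PXE on eth0, then eth4, then hard disk) is easy to get wrong on individual machines. A minimal sketch of a check follows; the device labels (`"eth0"`, `"eth4"`, `"disk"`) and the function name `boot_order_ok` are assumptions, and how the boot order is actually read from a machine's BIOS or BMC is outside its scope.

```python
# Sketch only: device labels are hypothetical placeholders for BIOS boot entries.
EXPECTED_BOOT_ORDER = ["eth0", "eth4", "disk"]


def boot_order_ok(devices):
    """Return True if the boot list starts with eth0, then eth4, then disk."""
    return devices[:len(EXPECTED_BOOT_ORDER)] == EXPECTED_BOOT_ORDER


if __name__ == "__main__":
    # Extra devices after the required three are allowed.
    print(boot_order_ok(["eth0", "eth4", "disk", "cdrom"]))
    # Booting from disk first would bypass PXE provisioning.
    print(boot_order_ok(["disk", "eth0", "eth4"]))
```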

MaaS Workflow Idea

- The Jenkins server kicks off a MaaS workflow job to initiate pod build out in a lab

- The MaaS regional controller receives the job and sends a task to the cluster controller for the lab

- The cluster controller builds the jump box for the OPNFV installer of choice: Fuel, Foreman, RDO, APEX, JOID, COMPASS, etc…

- The jumpbox builds out an OPNFV environment on the compute nodes of the pod with the correct OS and SDN controller: Ubuntu, CentOS, OpenDaylight, Contrail, MidoNet, etc.

- FUNCTEST jobs run to validate the environment

- A quick QTIP benchmark is run to provide a performance score

- More in-depth tests can be run as desired: vsperf, storage, yardstick, etc.

- When testing is complete the servers are erased and the pod is rebuilt with the new parameters
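The workflow idea above can be sketched as an ordered list of stages that run one after another and stop at the first failure. This is only an illustration of the sequencing: the stage names, the `run_workflow` function, and the convention that a handler returns `True` on success are all assumptions, and no Jenkins or MaaS API is called.

```python
# Sketch only: stage names mirror the workflow steps; handlers are placeholders.
STAGES = [
    "jenkins_starts_job",
    "regional_controller_dispatches_task",
    "cluster_controller_builds_jumpbox",
    "jumpbox_builds_pod",
    "functest",
    "qtip_benchmark",
    "in_depth_tests",
    "erase_and_rebuild",
]


def run_workflow(handlers):
    """Run stages in order and return the list of stages that completed.

    handlers maps a stage name to a callable returning True on success.
    Stages without a handler are treated as succeeding.
    """
    completed = []
    for stage in STAGES:
        handler = handlers.get(stage, lambda: True)
        if not handler():
            break  # stop at the first failing stage
        completed.append(stage)
    return completed


if __name__ == "__main__":
    # Simulate a run in which FUNCTEST fails.
    result = run_workflow({"functest": lambda: False})
    print("completed stages:", result)
```

Keeping the stages in a plain list makes the order explicit and lets a lab swap in real handlers one stage at a time.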

**References**

MAAS POC slides, Oct 12, 2015: maas.pdf

The original POC work on the original OPNFV Arno release (images and how to build the images) can be found here: http://people.canonical.com/~dduffey/files/OPNFV/