Doctor

Use Cases

Before explaining the use cases for NFVI fault management and maintenance, it is necessary to understand how current telecom nodes, e.g. 3GPP mobile core nodes (MME, S/P-GW, etc.), are deployed. Because of stringent High Availability (HA) requirements, these nodes are often deployed in an Active-Standby (ACT-SBY) configuration, which is a 1+1 redundancy scheme. The ACT and SBY nodes (aka Physical Network Functions (PNFs) in ETSI NFV terminology) are in a hot-standby configuration. If the ACT node is unable to function properly due to a fault or any other reason, the SBY node is instantly made ACT, and service can continue without interruption.

The ACT-SBY configuration needs to be maintained. This means that when an SBY node is made ACT, either the previously ACT node shall be made SBY after it recovers, or a new SBY node needs to be configured.

The NFVI fault management and maintenance requirements aim at realizing the same HA when the PNFs mentioned above are virtualized, i.e. turned into VNFs, and put under the operation of the Management and Orchestration (MANO) framework defined by ETSI NFV [refer to MANO GS].

The following three use cases show typical requirements and solutions for automated fault management and maintenance in NFV. The use cases assume that the VNFs are in an ACT-SBY configuration.

- Auto Healing (Triggered by critical error)

- Recovery based on fault prediction (Preventing service stop by handling warnings)

- VM Retirement (Managing service during H/W maintenance)

1. Auto Healing

Auto healing is the process of switching over to the SBY VNF when the ACT VNF is affected by a fault, and instantiating/configuring a new SBY for the new ACT VNF. Instantiating/configuring a new SBY is similar to instantiating a new VNF and is therefore outside the scope of this project.

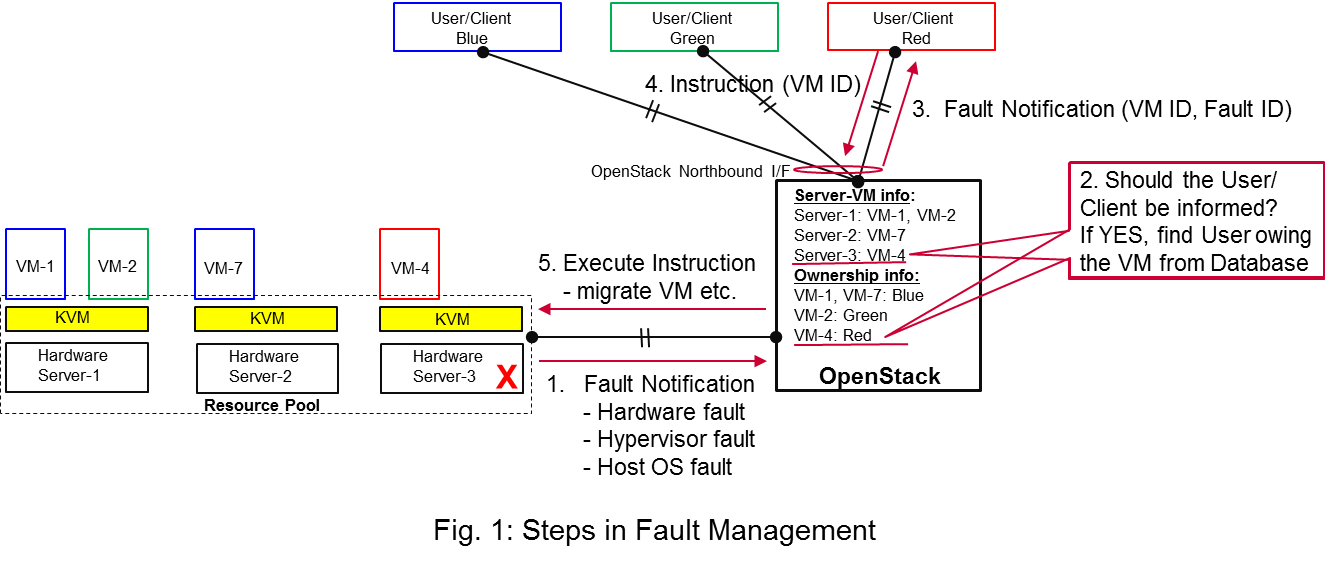

Fig. 1 presents a system-wide view of the relevant functional blocks. OpenStack is considered the VIM implementation, which has interfaces with the Resource Pool (NFVI in ETSI NFV terminology) and the Users/Clients. VNF implementations are represented as VMs in different colours. Users/Clients (VNFM or NFVO in ETSI NFV terminology) own/manage the VMs shown in the same colour.

The first requirement here is that OpenStack needs to detect faults (1. Fault Notification in Fig. 1) in the Resource Pool that affect the proper functioning of the VMs running on it. The relevant fault items should be configurable. OpenStack itself could be extended to detect such faults. Alternatively, a third-party fault monitoring element can be used, which then informs OpenStack about such faults. However, from an architectural point of view, the third-party fault monitoring element would also be a component of the VIM.
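Making the fault items configurable can be as simple as filtering incoming fault reports against an operator-defined set. The sketch below is only an illustration: the fault item names, the `RawFault` record and `relevant_faults` are hypothetical and do not correspond to any OpenStack component or monitoring tool.

```python
from dataclasses import dataclass

# Hypothetical, operator-configured set of fault items worth acting on.
# The names are illustrative and not taken from OpenStack or any monitor.
CONFIGURED_FAULT_ITEMS = {"cpu_failure", "memory_ecc_uncorrectable", "nic_link_down"}


@dataclass(frozen=True)
class RawFault:
    server: str  # physical host where the fault was observed
    item: str    # fault type reported by the (OpenStack or third-party) monitor


def relevant_faults(events, configured=CONFIGURED_FAULT_ITEMS):
    """Keep only the faults whose type the operator configured as relevant."""
    return [e for e in events if e.item in configured]


events = [
    RawFault("server-3", "nic_link_down"),
    RawFault("server-1", "fan_speed_low"),  # not configured, so ignored
]
print(relevant_faults(events))
# [RawFault(server='server-3', item='nic_link_down')]
```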

Once such a fault is detected, OpenStack shall find out which VMs are affected by it. In the example in Fig. 1, VM-4 is affected by a fault in Hardware Server-3. This mapping shall be maintained in OpenStack, e.g. as the Server-VM info table shown inside OpenStack in Fig. 1.
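The Server-VM info table can be pictured as a lookup from physical server to the VMs it hosts. This is a minimal sketch assuming an in-memory dictionary; only the VM-4/Server-3 pairing comes from Fig. 1, and the other entries and the `affected_vms` helper are hypothetical.

```python
# Hypothetical Server-VM info table: physical server -> VMs it hosts.
# Only "server-3" -> "VM-4" mirrors Fig. 1; the other rows are made up.
SERVER_VM_INFO = {
    "server-1": ["VM-1", "VM-2"],
    "server-2": ["VM-3"],
    "server-3": ["VM-4"],
}


def affected_vms(faulty_server, table=SERVER_VM_INFO):
    """Return the VMs hosted on the faulty server (empty list if none)."""
    return list(table.get(faulty_server, []))


print(affected_vms("server-3"))  # ['VM-4']
```

A real VIM would derive this mapping from its own placement records rather than keep a separate table; the dictionary only illustrates the lookup.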

Once OpenStack knows which VMs are affected, it finds out who owns/manages them (Step 2 in Fig. 1). In Fig. 1, through an Ownership info table, OpenStack knows that the manager of the red VM-4 is the red User/Client. OpenStack then notifies the red User/Client about this fault (3. Fault Notification in Fig. 1), preferably with sufficient abstraction rather than detailed physical fault information.
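Step 2 and Step 3 can be sketched as grouping the affected VMs by owner and sending each owner one abstracted notification. The Ownership info table contents, the owner names and the notification shape (`vms` plus a generic `status`) are assumptions for illustration, not a defined Doctor or OpenStack message format.

```python
from collections import defaultdict

# Hypothetical Ownership info table: VM -> owning User/Client (VNFM or NFVO).
OWNERSHIP_INFO = {"VM-1": "blue-client", "VM-3": "green-client", "VM-4": "red-client"}


def build_notifications(affected, ownership=OWNERSHIP_INFO):
    """Group affected VMs by owner and attach an abstract fault status.

    Only a generic status is exposed, not the physical fault details,
    following the preference for abstraction in the text.
    """
    per_owner = defaultdict(list)
    for vm in affected:
        per_owner[ownership[vm]].append(vm)
    return {owner: {"vms": vms, "status": "fault"} for owner, vms in per_owner.items()}


print(build_notifications(["VM-4"]))
# {'red-client': {'vms': ['VM-4'], 'status': 'fault'}}
```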

The User/Client then switches to its SBY configuration, making the SBY VNF ACT. It further initiates a process to instantiate/configure a new SBY. However, switching to SBY and creating a new SBY are VNFM/NFVO-level operations and are therefore outside the scope of this project.

Once the User/Client has switched to the SBY configuration, it notifies OpenStack (Step 4 “Instruction” in Fig. 1). OpenStack can then take the necessary actions (e.g. pre-determined by the involved network operator) to clean up the fault-affected VMs (Step 5 “Execute Instruction” in Fig. 1).

The key issue in this use case is that the VIM (OpenStack in this context) shall not take a standalone fault recovery action (e.g. migration of the affected VMs) before the ACT-SBY switchover is complete, as that might violate the ACT-SBY configuration and render the VNF out of service.
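The ordering constraint can be expressed as a small per-VM state machine in which Step 5 is blocked until the User/Client's instruction (Step 4) arrives. This is a sketch under stated assumptions: `RecoveryGate`, `VmState` and their transitions are hypothetical names chosen for illustration.

```python
from enum import Enum


class VmState(Enum):
    NORMAL = "normal"
    FAULT_NOTIFIED = "fault_notified"  # Step 3 sent, waiting for the User/Client
    INSTRUCTED = "instructed"          # Step 4 received: ACT-SBY switch is done


class RecoveryGate:
    """Hypothetical guard: no VIM-side recovery before the owner says go."""

    def __init__(self):
        self.state = {}

    def notify_fault(self, vm):
        self.state[vm] = VmState.FAULT_NOTIFIED

    def receive_instruction(self, vm):
        if self.state.get(vm) is not VmState.FAULT_NOTIFIED:
            raise ValueError(f"no pending fault notification for {vm}")
        self.state[vm] = VmState.INSTRUCTED

    def may_execute_recovery(self, vm):
        # Step 5 (e.g. migrating or cleaning up the VM) is allowed only here.
        return self.state.get(vm) is VmState.INSTRUCTED


gate = RecoveryGate()
gate.notify_fault("VM-4")
print(gate.may_execute_recovery("VM-4"))  # False: switchover not confirmed yet
gate.receive_instruction("VM-4")
print(gate.may_execute_recovery("VM-4"))  # True
```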

2. Recovery based on fault prediction

The fault management scenario explained in Clause 2.1.1 can also be performed based on fault prediction. In such cases, the VIM contains an intelligent fault prediction module which, based on its NFVI monitoring information, can predict an imminent fault in the elements of the NFVI. A simple example is the rising temperature of a Hardware Server, which might trigger a pre-emptive recovery action.

This use case is very similar to Auto Healing in Clause 2.1.1. Instead of fault detection (Step 1 “Fault Notification” in Fig. 1), the trigger comes from a fault prediction module in OpenStack, or from a third-party module which notifies OpenStack about an imminent fault. Steps 2 to 5 are the same as in the Auto Healing use case. In this case, the User/Client of a VM/VNF switches to SBY based on a predicted fault rather than an occurred fault.
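To make the temperature example concrete, a prediction module could fit a straight line to recent readings and raise a predicted fault if the trend reaches a limit within some horizon. The text does not specify any prediction method; the least-squares trend, the `predict_overheat` name and the limit/horizon values are assumptions for illustration only.

```python
def predict_overheat(samples, limit_c, horizon_s):
    """Return True if a least-squares linear trend of (time_s, temp_c)
    samples reaches limit_c within horizon_s seconds after the last sample.
    """
    n = len(samples)
    if n < 2:
        return False  # not enough data to estimate a trend
    mean_t = sum(t for t, _ in samples) / n
    mean_y = sum(y for _, y in samples) / n
    var_t = sum((t - mean_t) ** 2 for t, _ in samples)
    if var_t == 0:
        return False  # all samples share one timestamp
    slope = sum((t - mean_t) * (y - mean_y) for t, y in samples) / var_t
    intercept = mean_y - slope * mean_t
    t_future = samples[-1][0] + horizon_s
    return intercept + slope * t_future >= limit_c


rising = [(0, 60.0), (60, 63.0), (120, 66.0)]  # +3 degrees C per minute
print(predict_overheat(rising, limit_c=80.0, horizon_s=300))  # True (trend reaches ~81 C)
```

A positive result would play the role of Step 1 in Fig. 1; Steps 2 to 5 then proceed exactly as in Auto Healing.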

3. VM Retirement

All network operators perform maintenance of their network infrastructure, both regularly and irregularly. Besides the hardware, virtualization is expected to increase the number of elements subject to such maintenance, as the NFVI holds new elements such as the hypervisor and host OS. Maintenance of a particular resource element, e.g. hardware or hypervisor, may render a particular hardware server unusable until the maintenance procedure is complete.

However, the User/Client of the VMs needs to know that such resources WILL be unavailable because of NFVI maintenance. This is again to ensure that the ACT-SBY configuration is not violated. A standalone action (e.g. live migration) by the VIM/OpenStack to empty a physical machine for the subsequent maintenance procedure may not only violate the ACT-SBY configuration, but also impact real-time processing scenarios where resources dedicated to VMs are necessary and a pause in vCPU is not allowed. The User/Client is the party that can perform the switch between ACT and SBY, or switch to an alternative VNF forwarding graph, so that the hardware servers hosting the ACT VMs can be emptied for the upcoming maintenance operation. Once the target hardware servers are emptied (i.e. no VM is running on them), the VIM/OpenStack can mark them with an appropriate flag so that they are not considered for VM hosting as long as the flag is set.
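The maintenance flag and its effect on placement can be sketched as follows. `Host`, its `maintenance` attribute, `mark_for_maintenance` and `placement_candidates` are hypothetical; they are not OpenStack field or function names, and a real deployment would rely on the VIM's own scheduler mechanisms instead.

```python
from dataclasses import dataclass, field


@dataclass
class Host:
    name: str
    vms: list = field(default_factory=list)
    maintenance: bool = False  # hypothetical flag, not an OpenStack field


def mark_for_maintenance(host):
    """Flag a host only after the User/Client-driven switch has emptied it."""
    if host.vms:
        raise RuntimeError(f"{host.name} still hosts {host.vms}")
    host.maintenance = True


def placement_candidates(hosts):
    """Hosts a scheduler may consider for new VMs; flagged hosts are skipped."""
    return [h.name for h in hosts if not h.maintenance]


hosts = [Host("server-1", ["VM-1"]), Host("server-2")]
mark_for_maintenance(hosts[1])
print(placement_candidates(hosts))  # ['server-1']
```

Refusing to flag a non-empty host mirrors the rule that the VIM acts only after the servers have been emptied.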

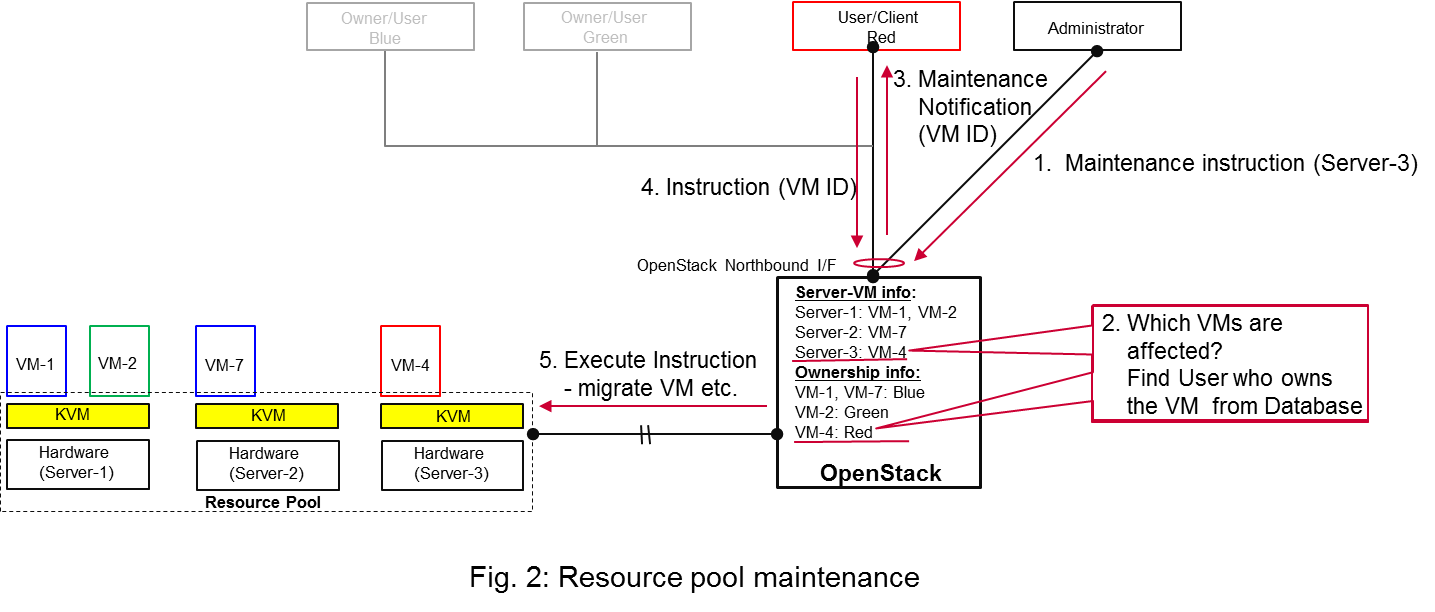

A high-level view of the maintenance procedure is presented in Fig. 2. VIM/OpenStack, through its northbound interface, receives a maintenance notification (1. Maintenance Instruction in Fig. 2) from the Administrator (e.g. a network operator) specifying which hardware is subject to maintenance. Maintenance operations include replacement/upgrade of hardware, update/upgrade of the hypervisor/host OS, etc.

The subsequent steps that enable the User/Client to perform ACT-SBY switching are very similar to the fault management scenario. Using its internal database, the VIM/OpenStack finds out which VMs are running on those particular Hardware Servers and who the managers of those VMs are (Step 2 in Fig. 2). The VIM/OpenStack then informs the respective Users/Clients (VNFMs or NFVO) (3. Maintenance Notification in Fig. 2). The Users/Clients then take the necessary actions (e.g. switch to SBY, switch VNF forwarding graphs) and notify the VIM/OpenStack (4. Instruction in Fig. 2). Upon receiving this notification, the VIM/OpenStack takes the necessary actions (5. Execute Instruction in Fig. 2) to empty the Hardware Servers so that the subsequent maintenance operation can be performed. Because Steps 2 to 5 are so similar, the maintenance procedure and the fault management procedure are kept in the same project.
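The shared Steps 2 to 5 can be sketched as one handler that is indifferent to whether the trigger was a fault, a predicted fault or a maintenance instruction, plus a check that a server may be emptied only once every owner of its VMs has replied. The tables, owner names, event labels and both function names are hypothetical and serve only to illustrate why one procedure can serve both use cases.

```python
from collections import defaultdict

# Hypothetical VIM-side tables (contents illustrative only).
SERVER_VM_INFO = {"server-2": ["VM-3"], "server-3": ["VM-4", "VM-5"]}
OWNERSHIP_INFO = {"VM-3": "green-client", "VM-4": "red-client", "VM-5": "blue-client"}


def handle_event(kind, servers):
    """Steps 2-3: find affected VMs and owners, build one notification per owner.

    `kind` is e.g. 'fault', 'predicted_fault' or 'maintenance'; only the
    label in the notification changes between the use cases.
    """
    per_owner = defaultdict(list)
    for server in servers:
        for vm in SERVER_VM_INFO.get(server, []):
            per_owner[OWNERSHIP_INFO[vm]].append(vm)
    return {owner: {"event": kind, "vms": vms} for owner, vms in per_owner.items()}


def servers_ready(servers, acknowledged_owners):
    """Step 5 may start on a server only when all owners of its VMs replied (Step 4)."""
    return [
        s for s in servers
        if all(OWNERSHIP_INFO[vm] in acknowledged_owners for vm in SERVER_VM_INFO.get(s, []))
    ]


print(handle_event("maintenance", ["server-3"]))
# {'red-client': {'event': 'maintenance', 'vms': ['VM-4']}, 'blue-client': {'event': 'maintenance', 'vms': ['VM-5']}}
print(servers_ready(["server-2", "server-3"], {"red-client", "blue-client"}))  # ['server-3']
```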