


Proposed MAAS PoC

This page documents the PoC proposed in September 2015. For details on how to get started with MAAS, see: https://wiki.opnfv.org/pharos/maas_getting_started_guide

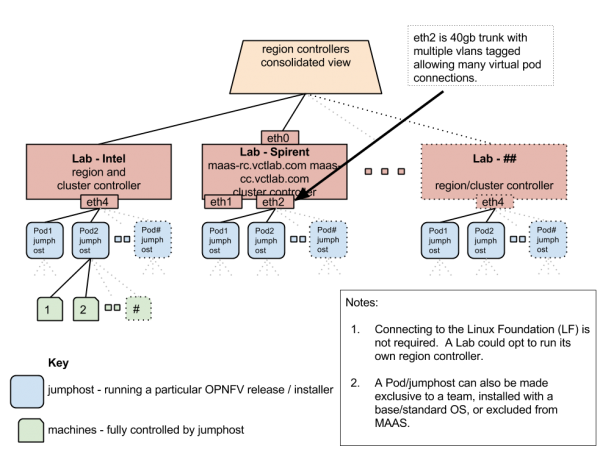

The diagram above shows the intended end state of the different labs. The basic idea is to use the community labs to deploy different installers repeatedly and reliably. This should increase the usage of each community lab and make it a true integration lab with the Linux Foundation lab.

Network assumptions:

- The MAAS regional controller and cluster controller will have connectivity to the community lab where deployment takes place.

- Each community data centre is expected to have the regional and cluster controllers on an interface (say eth4) that is connected to the jump hosts of the different pods within the data centre, on their eth4 interfaces only.

- Jump hosts and other deployment nodes must be configured to PXE boot from eth0 first, then from eth4, and then to fall back to the hard disk.

- The cluster controller will run DHCP/DNS/TFTP/PXE on the eth4 network to control the jump hosts.
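The boot-order assumption above can be written down as a small check. This is only a minimal sketch: the device labels (`pxe:eth0`, `pxe:eth4`, `disk`) and both function names are hypothetical and do not come from MAAS or any BIOS/BMC tool.

```python
# Boot sequence taken from the assumptions above. The string labels are
# hypothetical; real BIOS/BMC tooling will name boot devices differently.
EXPECTED_BOOT_ORDER = ["pxe:eth0", "pxe:eth4", "disk"]


def boot_order_ok(configured):
    """Return True if a node's boot order matches the expected sequence exactly."""
    return list(configured) == EXPECTED_BOOT_ORDER


def first_mismatch(configured):
    """Return the index of the first position that differs, or None if the order is correct."""
    configured = list(configured)
    for i, expected in enumerate(EXPECTED_BOOT_ORDER):
        if i >= len(configured) or configured[i] != expected:
            return i
    # Extra trailing entries also count as a mismatch.
    if len(configured) > len(EXPECTED_BOOT_ORDER):
        return len(EXPECTED_BOOT_ORDER)
    return None


if __name__ == "__main__":
    print(boot_order_ok(["pxe:eth0", "pxe:eth4", "disk"]))
    print(first_mismatch(["pxe:eth4", "pxe:eth0", "disk"]))
```

A check like this could be run against an inventory of jump hosts before a deployment is attempted, so that a node set to boot from disk first is caught early.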

MAAS Workflow Idea

- The Jenkins server kicks off a MAAS workflow job to initiate the pod build-out in a lab.

- The MAAS regional controller receives the job and sends the task to the cluster controller for that lab.

- The cluster controller builds the jump host for the OPNFV installer of choice: Fuel, Foreman, RDO, APEX, JOID, COMPASS, etc…

- The jump host builds out an OPNFV deployment on the compute nodes of the pod with the chosen OS and SDN controller: Ubuntu, CentOS, OpenDaylight, Contrail, MidoNet, etc.

- Functest jobs run to validate the environment.

- A quick QTIP benchmark is run to provide a performance score.

- More in-depth tests can be run as desired: VSPERF, storage, Yardstick, etc.

- When testing is complete, the servers are erased and the pod is rebuilt with the new parameters.
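The workflow steps above can be sketched as an ordered list of stages. This is an illustration under stated assumptions, not the PoC's implementation: `PodBuild`, `STAGE_NAMES`, and `run_workflow` are hypothetical names, no Jenkins or MAAS API is called, and stopping at the first failed stage is an assumed policy that the proposal does not specify.

```python
from dataclasses import dataclass, field
from typing import Callable, List


@dataclass
class PodBuild:
    """State carried through one build/test/erase cycle (hypothetical model)."""
    lab: str
    installer: str  # e.g. "Fuel" or "JOID"
    completed: List[str] = field(default_factory=list)


# Stage names mirror the workflow bullets, in order. "extended_tests" is
# optional in the proposal ("as desired"); it is listed here for completeness.
STAGE_NAMES = [
    "jenkins_trigger",
    "regional_dispatch",
    "build_jump_host",
    "deploy_opnfv",
    "functest",
    "qtip_benchmark",
    "extended_tests",
    "erase_and_rebuild",
]


def run_workflow(build: PodBuild, run_stage: Callable[[str, PodBuild], bool]) -> bool:
    """Run each stage in order; stop at the first failure (an assumed policy)."""
    for name in STAGE_NAMES:
        if not run_stage(name, build):
            return False
        build.completed.append(name)
    return True


if __name__ == "__main__":
    def fake_stage(name: str, build: PodBuild) -> bool:
        # Placeholder for real work; only prints what would happen.
        print(f"[{build.lab}] {name} ({build.installer})")
        return True

    demo = PodBuild(lab="example-lab", installer="JOID")
    print(run_workflow(demo, fake_stage))
```

Passing the stage runner in as a callable keeps the ordering logic separate from whatever actually talks to Jenkins or the controllers, so the sequence can be exercised with stubs as shown.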

Last modified: 2015/10/20 01:47 by Narinder Gupta