NFV Hypervisors-KVM

Latest Status

This is a new project submission and is in the discussion phase. Updates coming soon.

Project Name:

- Proposed name for the project: "NFV Hypervisors-KVM"

- Proposed name for the repository: kvmfornfv

Project Categories:

- Requirements

- Collaborative Development

Project description

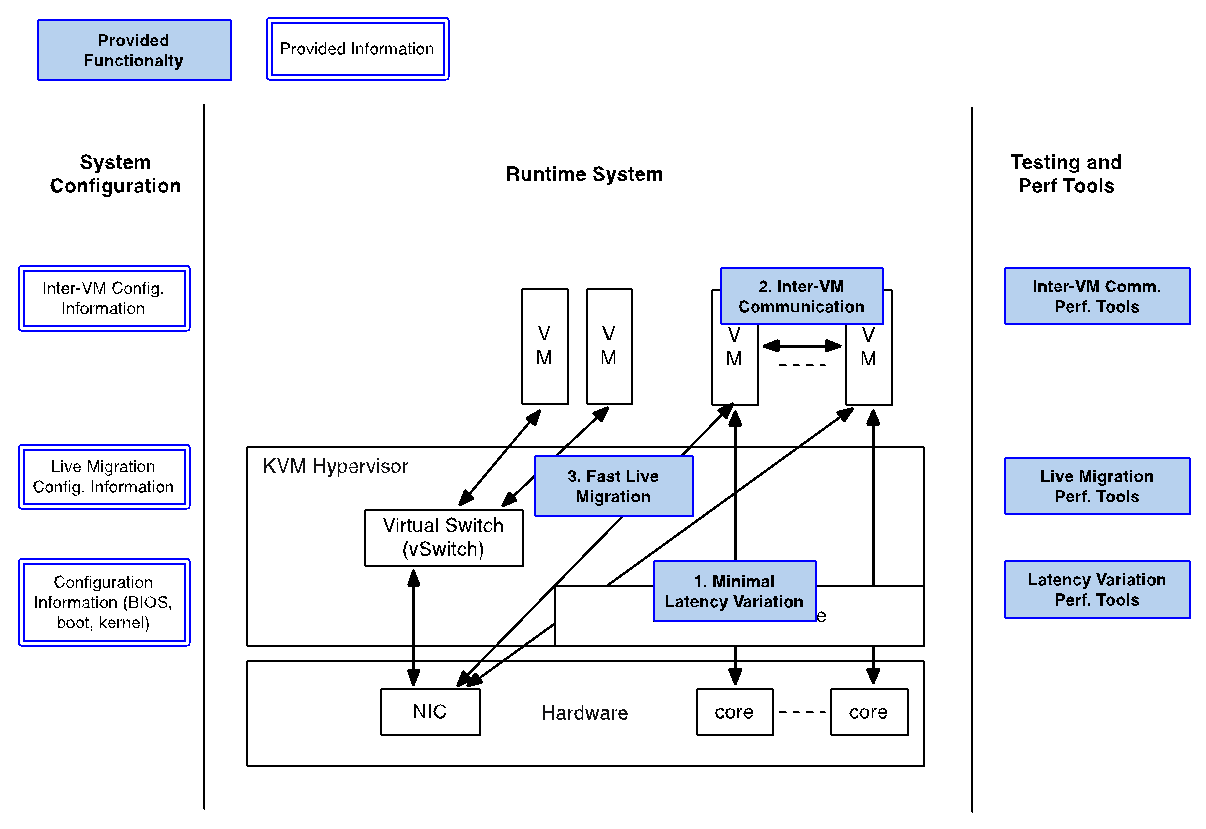

The NFV hypervisors provide crucial functionality in the NFV Infrastructure (NFVI). The existing hypervisors, however, are not necessarily designed or targeted to meet the requirements for the NFVI, and we need to make collaborative efforts toward enabling the NFV features.

In this project, we focus on the KVM hypervisor to enhance it for NFV, by looking at the following areas initially:

- Minimal Interrupt latency variation for data plane VNFs

  - Minimal Timing Variation for Timing correctness of real-time VNFs

  - Minimal packet latency variation for data-plane VNFs

- Inter-VM communication

- Fast live migration

Some of the above items will require software development and/or specific hardware features; others need only configuration information for the system (hardware, BIOS, OS, etc.).

We include a requirements-gathering stage as a formal part of the project. For each subproject, we will start with an organized requirements stage so that we can determine specific use cases (e.g. what kind of VMs should be live migrated) and requirements (e.g. interrupt latency, jitter, Mpps, migration time, downtime, etc.) to set out the performance goals.

Potential future projects would include:

- Dynamic scaling (via scale-out) using VM instantiation

- Fast live migration for SR-IOV

Scope

The output of this project will provide for each area:

- A list of the performance goals, which will be obtained by the OPNFV members (as described above)

- A set of comprehensive instructions for the system configurations (hardware features, BIOS setup, kernel parameters, VM configuration, options to QEMU/KVM, etc.)

- Patches that bring the above features upstream to Linux, the real-time patch set, KVM, QEMU, and libvirt, and

- Performance and interrupt latency measurement tools.

Minimal Interrupt latency variation for data plane VNFs

Processing performance and latencies depend on a number of factors, including the CPUs (frequency, power management features, etc.), micro-architectural resources, the cache hierarchy and sizes, memory (speed and hierarchy, such as NUMA), interconnects, I/O and I/O NUMA, devices, etc.

There are two separate types of latencies to minimize:

- Minimal Timing Variation for Timing correctness of real-time VNFs – timing correctness for scheduling operations (such as Radio scheduling), and

- Minimal packet latency variation for data-plane VNFs – packet delay variation, which applies to packet processing.

For a VM, interrupt latency (time between arrival of H/W interrupt and invocation of the interrupt handler in the VM), for example, can be either of the above or both, depending on the type of the device. Interrupt latency with a (virtual) timer can cause timing correctness issues with real-time VNFs even if they only use polling for packet processing.
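As a rough illustration of how timer latency variation can be observed and summarized, the sketch below samples the wake-up overshoot of a periodic sleep and reports the spread. The function names (`sample_timer_latency`, `summarize_latencies`) and the choice of peak-to-peak spread as the jitter figure are assumptions made for this example. They are not part of the project, and a Python loop is far too coarse for real measurements, which would use dedicated tools.

```python
import statistics
import time


def sample_timer_latency(count, interval_s=0.001):
    """Sleep `count` times and return each wake-up overshoot in microseconds."""
    overshoots_us = []
    for _ in range(count):
        start_ns = time.perf_counter_ns()
        time.sleep(interval_s)
        elapsed_ns = time.perf_counter_ns() - start_ns
        # Overshoot = how much later than requested the task resumed.
        overshoots_us.append(elapsed_ns / 1000.0 - interval_s * 1e6)
    return overshoots_us


def summarize_latencies(samples_us):
    """Return min, max, mean and peak-to-peak jitter for latency samples."""
    if not samples_us:
        raise ValueError("need at least one sample")
    lo, hi = min(samples_us), max(samples_us)
    return {
        "min_us": lo,
        "max_us": hi,
        "mean_us": statistics.fmean(samples_us),
        "jitter_us": hi - lo,  # assumed definition: worst case minus best case
    }


if __name__ == "__main__":
    print(summarize_latencies(sample_timer_latency(100)))
```

Running the same sampling inside a VM and on the host gives a first, informal impression of how much variation the virtualization layer adds.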

We assume that the VNFs are implemented properly to minimize interrupt latency variation within the VMs, but we have additional causes of latency variation on KVM:

- Asynchronous (e.g. external interrupts) and synchronous (e.g. instructions) VM exits and handling in KVM (and kernel routines called), which may have loops and spin locks

- Interrupt handling in the host Linux and KVM, scheduling and virtual interrupt delivery to VNFs

- Potential VM exit (e.g. EOI) in the interrupt service routines in VNFs, and

- Exit to the user-level (e.g. QEMU)

To minimize such latency variation, and thus jitter, we take the following steps (some in parallel):

- Statically and exclusively assign hardware resources (CPUs, memory, caches) to the VNFs,

- Pre-allocate huge pages (e.g. 1 GB/2 MB pages) and guest-to-host mappings, e.g. EPT (Extended Page Table) page tables, to minimize or mitigate latency from misses in caches,

- Use the host Linux configured for hard real-time and packet latency,

- Check the set of virtual devices used by the VMs to optimize or eliminate virtualization overhead if applicable,

- Use advanced hardware virtualization features that can reduce or eliminate VM exits, if present,

- Inspect the code paths in KVM and associated kernel services to eliminate code that can cause latencies (e.g. loops and spin locks), and

- Measure latencies intensively. We leverage the existing testing methods. OSADL, for example, defines industry tests for timing correctness.
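The first two steps above (exclusive CPU assignment and pre-allocated huge pages) are commonly expressed as host kernel boot parameters. The sketch below assembles such a command-line fragment. The helper name `host_kernel_cmdline` and the default values are hypothetical, and the right CPU set and page counts depend on the platform and the requirements gathered by the project.

```python
def host_kernel_cmdline(isolated_cpus, hugepage_size="1G", hugepage_count=8):
    """Build a kernel command-line fragment for CPU isolation and huge pages.

    The CPU list and huge page values are illustrative assumptions only.
    """
    cpus = ",".join(str(c) for c in sorted(isolated_cpus))
    return " ".join([
        f"isolcpus={cpus}",    # keep the general scheduler off these CPUs
        f"nohz_full={cpus}",   # suppress the periodic tick where possible
        f"rcu_nocbs={cpus}",   # offload RCU callbacks from these CPUs
        f"default_hugepagesz={hugepage_size}",
        f"hugepagesz={hugepage_size}",
        f"hugepages={hugepage_count}",  # pre-allocate pages at boot
    ])


if __name__ == "__main__":
    print(host_kernel_cmdline([2, 3, 4, 5]))
```

The remaining steps (real-time host kernel, virtual device selection, code path inspection) are not captured by boot parameters and need separate configuration or development work.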

Inter-VM Communication

The section “Hypervisor domain” in “Network Functions Virtualization (NFV); Infrastructure Overview” (ETSI GS NFV-INF 001 V1.1.1, 2015-01) suggests that the NFV hypervisor architecture have “direct memory mapped polled drivers for inter VM communications again using user mode instructions requiring no 'context switching'.”

In terms of the programming model for inter-VM communication, we basically have the following options:

- Use the framework-specific API. The framework, such as DPDK, hides the VM boundaries using the libraries or API.

- Use the generic network API. The OS in the VMs provides the conventional network communication functionality, including inter-VM communication.

Data plane VNFs would typically need to use option 1, as noted above. The IVSHMEM library in DPDK, for example, uses shared memory (called “ivshmem”) across the VMs (see http://dpdk.org/doc/guides/prog_guide/ivshmem_lib.html). This is one of the most efficient implementations available on KVM, but the ivshmem feature is not necessarily well received or maintained by the KVM/QEMU community. In addition, “security implications need to be carefully evaluated”, as pointed out there.

For option 2, it is possible for the VMs, the vSwitch, or the KVM hypervisor to lower overhead and latency using software (e.g. shared memory) or hardware virtualization features. Some of the techniques developed for such purposes are useful for option 1 as well. For example, the virtio Poll Mode Driver (PMD) (http://dpdk.org/doc/guides/nics/virtio.html) and the vhost library (such as vhost-user) in DPDK can be helpful when providing fast inter-VM communication and VM-host communication. In addition, hardware virtualization features, such as VMFUNC (see the KVM Forum 2014 PDF below for details), could be helpful when providing protected inter-VM communication by mitigating security issues with ivshmem.
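To make the idea of polled communication over shared memory concrete, here is a minimal single-producer/single-consumer ring laid out in one memory region. It is an illustration only: the class `PolledRing` and its layout are invented for this example and are not the DPDK or ivshmem API, the region is an anonymous mapping inside a single process rather than memory shared between VMs, and a real implementation would need atomic index updates and memory barriers.

```python
import mmap
import struct


class PolledRing:
    """Single-producer/single-consumer ring of fixed-size slots in one buffer."""

    HEADER = struct.Struct("<II")  # head (next write slot), tail (next read slot)

    def __init__(self, buf, slots, slot_size):
        self.buf, self.slots, self.slot_size = buf, slots, slot_size

    def _slot_offset(self, index):
        return self.HEADER.size + index * self.slot_size

    def try_send(self, payload):
        """Copy payload into the next free slot; return False if the ring is full."""
        if len(payload) > self.slot_size - 2:
            raise ValueError("payload does not fit in one slot")
        head, tail = self.HEADER.unpack_from(self.buf, 0)
        if (head + 1) % self.slots == tail:
            return False  # full: one slot is always left empty
        off = self._slot_offset(head)
        struct.pack_into("<H", self.buf, off, len(payload))
        self.buf[off + 2:off + 2 + len(payload)] = payload
        struct.pack_into("<I", self.buf, 0, (head + 1) % self.slots)  # publish
        return True

    def try_recv(self):
        """Return the next payload, or None if nothing is pending (poll again)."""
        head, tail = self.HEADER.unpack_from(self.buf, 0)
        if head == tail:
            return None
        off = self._slot_offset(tail)
        (length,) = struct.unpack_from("<H", self.buf, off)
        data = bytes(self.buf[off + 2:off + 2 + length])
        struct.pack_into("<I", self.buf, 4, (tail + 1) % self.slots)  # release
        return data


if __name__ == "__main__":
    region = mmap.mmap(-1, PolledRing.HEADER.size + 4 * 64)  # zero-filled
    ring = PolledRing(region, slots=4, slot_size=64)
    ring.try_send(b"packet-0")
    print(ring.try_recv())
```

The receiver never blocks or takes an interrupt. It simply polls `try_recv`, which is the property the ETSI text describes as requiring no context switching.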

For this feature, therefore, we need to take the following steps:

- Evaluate and compare the options (e.g. ivshmem, vhost-user, VMFUNC, etc.) in terms of performance, interface/API, usability/programming model, security, maintenance, etc. For performance evaluation of ivshmem vs. vhost-user, we will depend on output from the other project “Characterize vSwitch Performance for Telco NFV Use Cases”.

- Determine the best option(s). It is possible to have more than one, depending on the use cases.

- Deliver the patches to complete the option(s) above with the configuration info/directions and performance measurement tools.

“Extending KVM Models Toward High-Performance NFV”, KVM Forum 2014, http://www.linux-kvm.org/images/1/1d/01x05-NFV.pdf

Fast Live Migration

Live migration is a desirable feature for NFV as well, but it is unlikely that the current implementation of live migration on KVM meets the requirements for NFV in two key aspects:

- Time to complete live migration,

- Downtime

The “time to complete live migration” needs to measure the time to put the VNF back in service. The “downtime” is the interval during which the VNF service is unavailable, from its suspension on the source to its resumption on the destination.

The project will focus on the above aspects, and it will provide patches to upstream, configuration info/directions and performance measurement tools. Our experience and data show that we can improve live migration performance by using multi-threaded transfer, data compression, and hardware features (e.g. PML, Page Modification Logging; see http://www.intel.com/content/dam/www/public/us/en/documents/white-papers/page-modification-logging-vmm-white-paper.pdf, for example).

In general, live migration is not guaranteed to complete, depending on the workload of the VM and the bandwidth of the network used to transfer the ongoing VM state changes to the destination. Upon such failures, the VM simply stays on the source node. One effective way to increase the probability of success is to choose a time period when the workload is known to be low. This can be achieved at the orchestration or management level in an automated fashion, and it is outside the scope of this project.

We see more complex types of live migration beyond the above area. For example, memory of VMs can be accessed directly by a vSwitch for packet transfer. In this case, the vSwitch needs to be notified of the live migration. Also, the vSwitch on the destination machine needs to include the VM. We will discuss whether this kind of live migration is required at the requirements gathering stage, and then decide whether and how to support it.

Another significant limitation of the current live migration is the lack of support for SR-IOV. This is mainly due to missing H/W features in the IOMMU and devices that are required to achieve live migration. Once we have line of sight to software workarounds, we will add SR-IOV support to this subproject.
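The dependence on workload and bandwidth can be illustrated with a deliberately simplified model of iterative pre-copy migration: each round retransmits the memory dirtied during the previous round, and the final stop-and-copy happens once the remainder can be sent within the allowed downtime. The function `precopy_estimate`, its parameters, and the constant dirty rate are assumptions made for this sketch. It does not describe the actual QEMU/KVM algorithm or its tunables.

```python
def precopy_estimate(mem_mb, dirty_mb_s, bw_mb_s, max_downtime_s, max_rounds=30):
    """Return (converged, total_time_s, downtime_s) for a simple pre-copy model.

    Assumes a constant page dirty rate and constant network bandwidth.
    """
    remaining_mb = float(mem_mb)
    total_s = 0.0
    for _ in range(max_rounds):
        transfer_s = remaining_mb / bw_mb_s
        if transfer_s <= max_downtime_s:
            # Small enough: pause the VM and send the rest (stop-and-copy).
            return True, total_s + transfer_s, transfer_s
        total_s += transfer_s
        # Memory dirtied while this round was in flight must be sent again.
        remaining_mb = min(float(mem_mb), dirty_mb_s * transfer_s)
    return False, total_s, None  # did not converge; VM stays on the source


if __name__ == "__main__":
    print(precopy_estimate(1000, 100, 1000, 0.05))   # light workload
    print(precopy_estimate(1000, 1200, 1000, 0.05))  # dirty rate exceeds bandwidth
```

In this model the remaining data shrinks by the ratio of dirty rate to bandwidth each round, so migration converges only when that ratio is below one. This is why a quieter workload or a faster link raises the probability of success.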

Testability

- Specify testing and integration like interoperability, scalability, high availability

- The project plans to leverage test infrastructure as provided by Projects VSPERF and Pharos in OPNFV.

Documentation

- API Docs

- Functional block description

Dependencies

- OPNFV Project: “Characterize vSwitch Performance for Telco NFV Use Cases” (VSPERF) for performance evaluation of ivshmem vs. vhost-user.

- OPNFV Project: “Pharos” for Test Bed Infrastructure, and possibly “Yardstick” for infrastructure verification.

- There are currently no similar projects underway in OPNFV or in an upstream project.

- The relevant upstream projects to be influenced here are QEMU/KVM and libvirt.

- The next release of QEMU/KVM is targeted for August 2015. The project will attempt to influence this timeline. The release cadence for QEMU/KVM is every 4 to 5 months. The libvirt project has a one-month cadence with a new release on the 1st of every month.

- Concerning liaisons with other fora, there should be at least some information exchange between OPNFV and ETSI NFV with respect to the goals of this project.

- In terms of HW dependencies, the aim is to use standard IA Server hardware for this project, as provided by OPNFV Pharos.

Committers and Contributors

Names and affiliations of the committers <Developers>:

- Yunhong Jiang: yunhong.jiang@intel.com

- Rik van Riel: riel@redhat.com

- Yang Zhang: yang.z.zhang@intel.com

- Bruce Ashfield: bruce.ashfield@windriver.com

- Weidong Han: hanweidong@huawei.com

- Yuxin Zhuang: zhuangyuxin@huawei.com

- Guangrong Xiao: guangrong.xiao@intel.com

Names and affiliations of any other contributors <Project lead or manager>:

- Don Dugger: donald.d.dugger@intel.com

- Jun Nakajima: jun.nakajima@intel.com

- John Browne: john.j.browne@intel.com

- Mike Lynch: michael.a.lynch@intel.com

- Manish Jaggi: Manish.Jaggi@caviumnetworks.com

- Kin-Yip Liu: kin-yip.liu@caviumnetworks.com

Planned Deliverables

Patches to the relevant upstream project (QEMU/KVM). If the August 2015 release cannot be intercepted, the project will aim to intercept the December/January release. That may then push the inclusion of this work into the OPNFV C release.

Proposed Release Schedule

The project would aim to intersect the OPNFV B release in December 2015.