Rescuer

Proposed name for the project: Rescuer

Proposed name for the repository: rescuer

Project Categories: Requirement

Project description:

Disaster Recovery (DR) is an important issue in NFV. For example, during holiday traffic bursts, the shutdown or malfunction of a VIM instance, or even of a complete site, may cause severe service interruption or complete service termination if there is no strong infrastructure-level disaster recovery support. This project therefore proposes to develop use cases, requirements, and upstream project blueprints, focusing on how to make the infrastructure DR-capable so that service continuity meets requirements expressed in terms such as RPO (Recovery Point Objective) and RTO (Recovery Time Objective) when an extreme scenario strikes.

ETSI NFV Requirements

From the perspective of resiliency (with respect to disaster recovery and flexibility in resource usability), it is desirable to be able to locate the standby node in a topologically different site and maintain connectivity. In order to support disaster recovery for a certain critical functionality, the NFVI resources needed by the VNF should be located in different geographic locations; therefore, the implementation of NFV should allow a geographically redundant deployment.

During a disaster, multiple VNFs in a NFVI-PoP may fail; in more severe cases, the entire NFVI-PoP or multiple NFVI-PoPs may fail. Accordingly, the recovery process for such extreme scenarios needs to take into account additional factors that are not present for the single VNF failure scenario. The restoration and continuity would be done at a WAN scope (compared to a HA recovery done at a LAN scope, described above). They could also transcend administrative and regulatory boundaries, and involve restoring service over a possibly different NFV-MANO environment. Depending on the severity of the situation, it is possible that virtually all telecommunications traffic/sessions terminating at the impacted NFVI-PoP may be cutoff prematurely. Further, new traffic sessions intended for end users associated with the impacted NFVI-PoP may be blocked by the network operator depending on policy restrictions. As a result, there could be negative impacts on service availability and reliability which need to be mitigated. At the same time, all traffic/sessions that traverse the impacted NFVI-PoP intended for termination at other NFVI-PoPs need to be successfully re-routed around the disaster area. Accordingly:

- Network Operators should provide the Disaster Recovery requirements and NFV-MANO should design and develop the Disaster Recovery policies such that:

a. Include the designation of regional disaster recovery sites that have sufficient VNF resources and comply with any special regulations including geographical location.

b. Define prioritized lists of VNFs that are considered vital and need to be replaced as swiftly as possible. The prioritized lists should track the Service Availability levels from sub-clause 6.3. These critical VNFs need to be instantiated and placed in proper standby mode in the designated disaster recovery sites.

c. Install processes to activate and prepare the appropriate disaster recovery site to “takeover” the impacted NFVI-PoP VNFs including bringing the set of critical VNFs on-line, instantiation/activation of additional standby redundant VNFs as needed, restoration of data and reconfiguration of associated service chains at the designated disaster recovery site as soon as conditions permit.

- Network Operators should be able to modify the default Disaster Recovery policies defined by NFV-MANO, if needed

- The designated disaster recovery sites need to have the latest state information on each of the NFVI-PoP locations in the regional area conveyed to them on a regular schedule. This enables the disaster recovery site to be prepared to the extent possible, when a disaster hits one of the sites. Appropriate information related to all VNFs at the failed NFVI-PoP is expected to be conveyed to the designated disaster recovery site at specified frequency intervals.

- After the disaster situation recedes, Network Operators should restore the impacted NFVI-PoP back to its original state as swiftly as possible, or deploy a new NFVI-PoP to replace the impacted NFVI-PoP based on the comprehensive assessment of the situation. All on-site Service Chains must be reconfigured by instantiating fresh VNFs at the original location. All redundant VNFs activated at the designated Disaster Recovery site to support the disaster condition must be de-linked from the on-site Service Chains by draining and re-directing traffic as needed to maintain service continuity. The redundant VNFs are then placed on standby mode per disaster recovery policy.

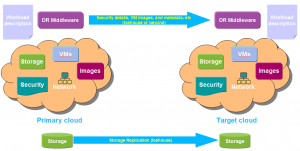
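As an illustration of the prioritized VNF lists described in item b above, the following is a minimal sketch of how a DR site might order VNFs for activation. The `Vnf` class, its fields, and the convention that level 1 is the most critical are assumptions made for this example; they are not defined by ETSI NFV or by this project.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Vnf:
    name: str
    availability_level: int  # assumption: 1 is the most critical level
    standby_ready: bool      # True if a standby instance already exists at the DR site


def recovery_order(vnfs):
    """Order VNFs for activation at the DR site.

    Most critical level first; within a level, VNFs that already have a
    standby instance come first; the name breaks any remaining ties.
    """
    return sorted(
        vnfs,
        key=lambda v: (v.availability_level, not v.standby_ready, v.name),
    )


if __name__ == "__main__":
    # Hypothetical inventory, for illustration only.
    inventory = [
        Vnf("billing", 3, False),
        Vnf("vIMS", 1, True),
        Vnf("vEPC", 1, False),
        Vnf("dns", 2, True),
    ]
    for vnf in recovery_order(inventory):
        print(vnf.name)
```

A real policy would likely take more inputs (site capacity, regulatory constraints, service chain dependencies); the sketch only shows the ordering idea.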

DR In OpenStack

Disaster Recovery (DR) for OpenStack is an umbrella topic that describes what needs to be done for applications and services (generally referred to as workload) running in an OpenStack cloud to survive a large scale disaster. Providing DR for a workload is a complex task involving infrastructure, software and an understanding of the workload. To enable recovery following a disaster, the administrator needs to execute a complex set of provisioning operations that will mimic the day-to-day setup in a different environment. Enabling DR for OpenStack hosted workloads requires enablement (APIs) in OpenStack components (e.g., Cinder) and tools which may be outside of OpenStack (e.g., scripts) to invoke, orchestrate and leverage the component specific APIs.

Disaster Recovery should include support for:

- Capturing the metadata of the cloud management stack, relevant for the protected workloads/resources: either as point-in-time snapshots of the metadata, or as continuous replication of the metadata.

- Making available the VM images needed to run the hosted workload on the target cloud.

- Replication of the workload data using storage replication, application level replication, or backup/restore.

We note that metadata changes are less frequent than application data changes, and different mechanisms can handle replication of different portions of the metadata and data (volumes, images, etc.).
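Because different portions of the metadata and data may be replicated by different mechanisms, each portion can be checked separately against the recovery point objective. The following is a minimal sketch of such a check. The `ReplicationPlan` class, its field names, and the example values are hypothetical, and the sketch assumes that the worst-case data loss of a periodic mechanism equals its replication interval (transfer time is ignored).

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ReplicationPlan:
    resource: str      # e.g. "metadata", "volumes", "images"
    method: str        # e.g. "snapshot", "storage-replication", "backup"
    interval_s: float  # seconds between replication points; 0 means continuous


def rpo_violations(plans, rpo_s):
    """Return the resources whose replication interval exceeds the RPO.

    Simplifying assumption: worst-case data loss equals the interval
    between two replication points.
    """
    return [p.resource for p in plans if p.interval_s > rpo_s]


if __name__ == "__main__":
    # Hypothetical plan: each portion uses a different mechanism.
    plans = [
        ReplicationPlan("metadata", "snapshot", 600),
        ReplicationPlan("volumes", "storage-replication", 0),
        ReplicationPlan("images", "backup", 86400),
    ]
    print(rpo_violations(plans, rpo_s=900))
```

With a 15-minute RPO, only the daily image backup in this made-up plan would be flagged.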

The approach is built around:

- Identify required enablement and missing features in OpenStack projects

- Create enablement in specific OpenStack projects

- Create orchestration scripts to demonstrate DR

When resources to be protected are logically associated with a workload (or a set of inter-related workloads), both the replication and the recovery processes should be able to incorporate hooks to ensure consistency of the replicated data & metadata, as well as to enable customization (automated or manual) of the individual workload components at the recovery site. Heat can be used to represent such workloads, as well as to automate the above processes (when applicable).

Scope:

Describe the problem being solved by project

The project aims to develop the requirements and use cases for NFVI and VIM to support Telco-grade DR implementation:

- Requirements for VIM and NFVI to support Multisite DR, including:

a. Active-active, active-hot standby, and active-cold standby designs

b. Replication of all configuration and metadata required by an application (Neutron, Cinder, Nova, etc.)

c. Ability to ensure consistency of the replicated data & metadata

d. Support for a wide range of data replication methods: storage system based replication; hypervisor assisted replication (possibly between heterogeneous storage systems), for example using DRBD or Qemu based replication; backup and restore methods; pluggable application level replication methods

- DR Use Cases to provide more requirements.

- Formulate blueprints (BPs) that reflect the requirements, and implement those BPs in the upstream community.

- ..

Specify any interface/API specification proposed

New DR-related API specifications would be proposed.

Identify a list of features and functionality that will be developed.

- Requirements for infrastructure to support Telco grade DR implementation

- Use cases for infrastructure-level support of Telco-grade DR implementation

- Upstream feature development according to the developed requirements.

Identify what is in or out of scope, which helps reduce discussion during the development phase.

In scope: infrastructure level support for Telco grade DR implementation

Out of scope: DR policy making, and DR decision making and planning at the upper level.

Describe how the project is extensible in future

This project is extendable for future functions.

Testability: ''(optional, Project Categories: Integration & Testing)''

N/A.

Documentation: ''(optional, Project Categories: Documentation)''

This project intends to produce the following documentation supporting the meta distribution:

- Installation Guide

- User Guide

- Developer Guide

- DR API Document

Dependencies:

Identify similar projects that are underway or being proposed in OPNFV or upstream projects

OPNFV: Multisite, HA For VNF, Doctor.

Identify any open source upstream projects and release timeline.

OpenStack

It would be aligned with the OpenStack release schedule (per cycle) and the OPNFV schedule.

Identify any specific development to be staged with respect to the upstream project and releases.

N/A

Are there any external fora or standards development organization dependencies? If possible, list informative and normative reference specifications.

ETSI NFV REL

If project is an integration and test, identify hardware dependency.

None

Committers and Contributors:

Names and affiliations of the committers:

Guoguang Li, (liguoguang@huawei.com);

Zhipeng(Howard) Huang, (huangzhipeng@huawei.com);

Any other contributors:

TBD

Planned deliverables

Project release package as OPNFV or open source upstream projects

As upstream projects

Project deliverables with multiple dependencies across other project categories

None

Proposed Release Schedule:

This project is planned for the third release of the OPNFV platform.