
Target System State

This page defines the target system state that results from a successful execution of the BGS. The target system state should be independent of the installer approach taken.

Hardware setup

The Pharos specification describes a "standard" OPNFV deployment environment (compute, network and storage).

Key Software Components and associated versions

| Component Type | Flavor | Version | Notes |
|---|---|---|---|
| Base OS | CentOS | 7 | Including current updates for all installed packages |
| SDN Controller | OpenDaylight | Helium SR2 | With Open vSwitch |
| Infrastructure controller | OpenStack | Juno | |

CentOS 7

Install only core components on all servers. Additional dependencies are pulled in when specific packages are added. This list is derived from the OpenStack Fuel template, with Fuel-specific components removed. Puppet is included because the OpenSteak, Foreman and Fuel installers all use Puppet.

| Package | Version | Note |
|---|---|---|
| authconfig | | |
| bind-utils | | |
| cronie | | |
| crontabs | | |
| curl | | |
| daemonize | | |
| dhcp | | |
| gdisk | | |
| lrzip | | |
| lsof | | |
| man | | |
| mlocate | | |
| nmap-ncat | | |
| ntp | | |
| openssh-clients | | |
| policycoreutils | | |
| rsync | | |
| ruby21-puppet | | |
| ruby21-rubygem-netaddr | | |
| ruby21-rubygem-openstack | | |
| selinux-policy-targeted | | |
| strace | | |
| subscription-manager | | |
| sysstat | | |
| system-config-firewall-base | | |
| tcpdump | | |
| telnet | | |
| vim-enhanced | | |
| virt-what | | |
| wget | | |
| yum | | |
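You can verify the package baseline on a deployed server by comparing the list above with the local RPM database. The Python 3 sketch below is one possible check and is not part of any installer. It assumes a Python 3.5+ interpreter on the host (CentOS 7's default `python` is 2.7) and uses `rpm -qa --queryformat '%{NAME}\n'` to list installed package names. `REQUIRED_PACKAGES`, `installed_package_names` and `missing_packages` are hypothetical names.

```python
import subprocess

# Core packages from the CentOS 7 table above, in table order.
REQUIRED_PACKAGES = [
    "authconfig", "bind-utils", "cronie", "crontabs", "curl", "daemonize",
    "dhcp", "gdisk", "lrzip", "lsof", "man", "mlocate", "nmap-ncat", "ntp",
    "openssh-clients", "policycoreutils", "rsync", "ruby21-puppet",
    "ruby21-rubygem-netaddr", "ruby21-rubygem-openstack",
    "selinux-policy-targeted", "strace", "subscription-manager", "sysstat",
    "system-config-firewall-base", "tcpdump", "telnet", "vim-enhanced",
    "virt-what", "wget", "yum",
]


def installed_package_names():
    """Return the set of package names recorded in the local RPM database."""
    result = subprocess.run(
        ["rpm", "-qa", "--queryformat", "%{NAME}\n"],
        stdout=subprocess.PIPE,
        universal_newlines=True,  # decode output to str (Python 3.5+)
        check=True,
    )
    return set(result.stdout.split())


def missing_packages(installed, required=REQUIRED_PACKAGES):
    """Return the required packages not present in `installed`, in table order."""
    return [name for name in required if name not in installed]
```

On a target node, `missing_packages(installed_package_names())` returns an empty list when the baseline is complete. Extra packages added by an installer do not affect the result, because the check only reports names that are missing.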

OpenStack Juno

OpenStack Juno components and supporting services:

| Component | Package | Version | Notes |
|---|---|---|---|
| Nova | | Juno | |
| Glance | | Juno | |
| Neutron | | Juno | |
| Keystone | | Juno | |
| MySQL | | Juno | |
| RabbitMQ | | Juno | |
| Pacemaker cluster stack | | Juno | |
| Corosync | | Juno | |
| Ceilometer | | Juno | NOT CONFIRMED - UNSURE OF STATE |
| Horizon | | Juno | NOT CONFIRMED - UNSURE OF STATE |
| Heat | | Juno | NOT CONFIRMED - UNSURE OF STATE |
| Tempest | | Juno | NOT CONFIRMED - UNSURE OF STATE |
| Robot | | Juno | What about documenting on the CI page and not the getting started page? |

OpenDaylight Helium SR2

| Component | Version | Description | Notes |
|---|---|---|---|
| odl-dlux-all | 0.1.2-Helium-SR2 | | |
| odl-config-persister-all | 0.2.6-Helium-SR2 | OpenDaylight :: Config Persister:: All | |
| odl-aaa-all | 0.1.2-Helium-SR2 | OpenDaylight :: AAA :: Authentication :: All Featu | |
| odl-ovsdb-all | 1.0.2-Helium-SR2 | OpenDaylight :: OVSDB :: all | |
| odl-ttp-all | 0.0.3-Helium-SR2 | OpenDaylight :: ttp :: All | |
| odl-openflowplugin-all | 0.0.5-Helium-SR2 | OpenDaylight :: Openflow Plugin :: All | |
| odl-adsal-compatibility-all | 1.4.4-Helium-SR2 | OpenDaylight :: controller :: All | |
| odl-tcpmd5-all | 1.0.2-Helium-SR2 | | |
| odl-adsal-all | 0.8.3-Helium-SR2 | OpenDaylight AD-SAL All Features | |
| odl-config-all | 0.2.7-Helium-SR2 | OpenDaylight :: Config :: All | |
| odl-netconf-all | 0.2.7-Helium-SR2 | OpenDaylight :: Netconf :: All | |
| odl-base-all | 1.4.4-Helium-SR2 | OpenDaylight Controller | |
| odl-mdsal-all | 1.1.2-Helium-SR2 | OpenDaylight :: MDSAL :: All | |
| odl-yangtools-all | 0.6.4-Helium-SR2 | OpenDaylight Yangtools All | |
| odl-restconf-all | 1.1.2-Helium-SR2 | OpenDaylight :: Restconf :: All | |
| odl-integration-compatible-with-all | 0.2.2-Helium-SR2 | | |
| odl-netconf-connector-all | 1.1.2-Helium-SR2 | OpenDaylight :: Netconf Connector :: All | |
| odl-akka-all | 1.4.4-Helium-SR2 | OpenDaylight :: Akka :: All | |
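You can check whether a controller matches this list from the Karaf console, where `feature:list -i` shows the installed features. The Python sketch below keeps the table as data. It flags any entry whose version lacks the Helium SR2 suffix and builds a single `feature:install` command line to paste into the console. It is an illustration only: the helper names are hypothetical, and the sketch assumes features are installed from the Karaf console rather than through an installer-specific mechanism.

```python
# Karaf feature names and versions, copied from the OpenDaylight Helium SR2 table.
ODL_FEATURES = {
    "odl-dlux-all": "0.1.2-Helium-SR2",
    "odl-config-persister-all": "0.2.6-Helium-SR2",
    "odl-aaa-all": "0.1.2-Helium-SR2",
    "odl-ovsdb-all": "1.0.2-Helium-SR2",
    "odl-ttp-all": "0.0.3-Helium-SR2",
    "odl-openflowplugin-all": "0.0.5-Helium-SR2",
    "odl-adsal-compatibility-all": "1.4.4-Helium-SR2",
    "odl-tcpmd5-all": "1.0.2-Helium-SR2",
    "odl-adsal-all": "0.8.3-Helium-SR2",
    "odl-config-all": "0.2.7-Helium-SR2",
    "odl-netconf-all": "0.2.7-Helium-SR2",
    "odl-base-all": "1.4.4-Helium-SR2",
    "odl-mdsal-all": "1.1.2-Helium-SR2",
    "odl-yangtools-all": "0.6.4-Helium-SR2",
    "odl-restconf-all": "1.1.2-Helium-SR2",
    "odl-integration-compatible-with-all": "0.2.2-Helium-SR2",
    "odl-netconf-connector-all": "1.1.2-Helium-SR2",
    "odl-akka-all": "1.4.4-Helium-SR2",
}


def wrong_release(features, release="Helium-SR2"):
    """Return the sorted names of features whose version lacks the release suffix."""
    return sorted(name for name, version in features.items()
                  if not version.endswith("-" + release))


def karaf_install_command(features):
    """Build one Karaf console command that installs every listed feature, in order."""
    return "feature:install " + " ".join(features)
```

Because a dict preserves insertion order, the generated command lists the features in the same order as the table.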

Additional Components

| Component | Package | Version | Notes |
|---|---|---|---|
| Hypervisor: KVM | | | |
| Forwarder: OVS | | 2.3.0 | |
| Node config: Puppet | | | |
| Example VNF1: Linux | CentOS | 7 | |
| Example VNF2: OpenWrt | OpenWrt | 14.07 (Barrier Breaker) | |
| Container Provider: Docker | docker.io (lxc-docker) | latest | Fuel delivery of ODL |

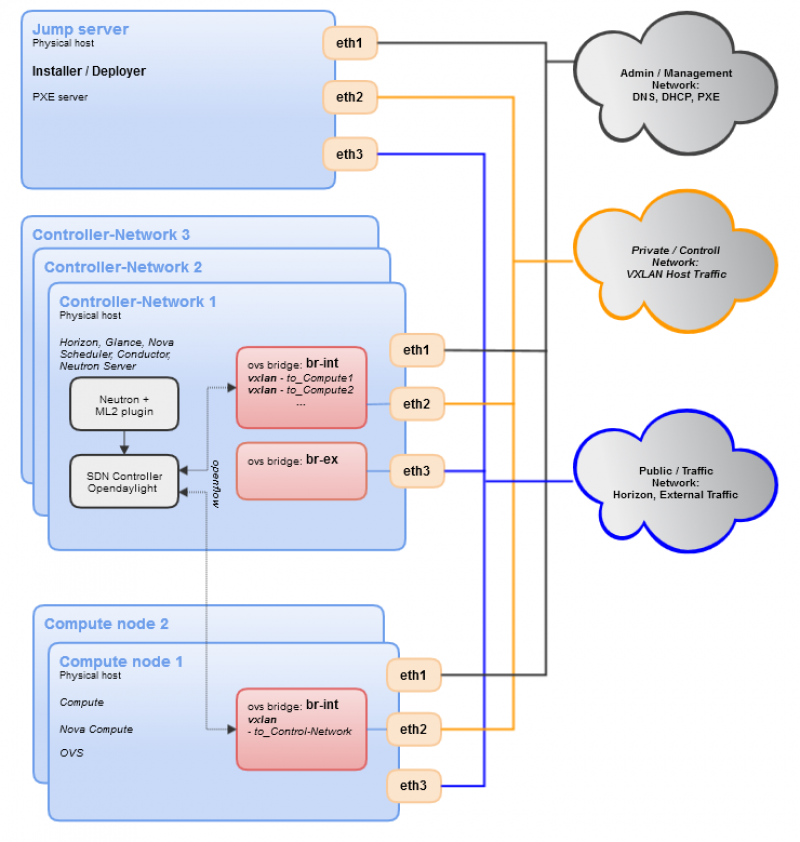

Network setup

Describe which L2 segments are configured (e.g. for management, control or use by client VNFs), how these segments are realized (e.g. VXLAN between OVS instances) and which segment numbering (e.g. VLAN IDs, VXLAN IDs) is used. Describe which IP addresses, DNS entries (if any), default gateways, etc. are configured, and whether and how the segments are interconnected.

The subnets used and their purposes, as defined in the following blueprints:

Network addressing and topology blueprint - FOREMAN

- Admin (Management) - 192.168.0.0/24 - manage stack from Fuel/Foreman

- Private (Control) - 192.168.11.0/24 - API traffic and inner-tenant communication

- Storage - 192.168.12.0/24 - separate VLAN for storage

- Public (Traffic) - management IPs of OpenStack/ODL, plus traffic

Network addressing and topology blueprint - FUEL

- Admin (PXE) - 10.20.0.0/16 - FUEL Admin network (PXE Boot, cobbler/nailgun/mcollective work)

- Public (Tagged VLAN) - subnet depends on the user's network; used for external communication of the Control nodes and for L3 NAT (configurable subnet range)

- Storage (Tagged VLAN) - 192.168.1.0/24 (default)

- MGMT (Tagged VLAN) - 192.168.0.0/24 (default), used for OpenStack communication
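You can sanity-check the addressing blueprints before deployment with Python's standard `ipaddress` module. The sketch below assumes each network is described by a single CIDR string. It reports every pair of networks within one plan whose ranges overlap. The Public networks are left out because their ranges are site specific. The dictionary and function names are hypothetical.

```python
import ipaddress
from itertools import combinations

# Foreman blueprint subnets (Public omitted: its range is site specific).
FOREMAN_PLAN = {
    "admin": "192.168.0.0/24",
    "private": "192.168.11.0/24",
    "storage": "192.168.12.0/24",
}

# Fuel blueprint defaults (Public omitted: chosen by the user).
FUEL_PLAN = {
    "admin-pxe": "10.20.0.0/16",
    "storage": "192.168.1.0/24",
    "management": "192.168.0.0/24",
}


def overlapping(plan):
    """Return (name, name) pairs, sorted by name, whose subnets overlap."""
    nets = {name: ipaddress.ip_network(cidr) for name, cidr in plan.items()}
    return [(a, b) for a, b in combinations(sorted(nets), 2)
            if nets[a].overlaps(nets[b])]
```

To check a site-specific Public subnet, add it to the plan before calling the function. For example, `overlapping({**FUEL_PLAN, "public": "172.16.0.0/24"})` returns an empty list.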

Currently there are two approaches to VLAN tagging:

- Fuel - tagging/untagging is done on the Linux hosts, the switch should be configured to pass tagged traffic.

- Foreman - VLANs are configured on switch and packets are coming to/from Linux hosts untagged.

It was agreed not to use VLAN tagging unless the target hardware lacks the required number of interfaces. Tagging nevertheless remains a viable option for users who want to implement the target system in a restricted hardware environment.
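The tagging policy can be expressed as a small planning function. The sketch below is hypothetical and not taken from either installer. It gives each network its own untagged NIC while NICs last. Only when there are fewer NICs than networks does it carry the remaining networks as tagged VLANs on the last NIC. The VLAN IDs starting at 100 are placeholders, not values defined on this page.

```python
def plan_interfaces(networks, nics, first_vlan_id=100):
    """Map each network name to (nic, vlan_id); vlan_id None means untagged.

    Hypothetical policy sketch: networks are untagged, one per NIC, as long
    as NICs are available. Any remaining networks are tagged on the last NIC,
    using placeholder VLAN IDs counted up from first_vlan_id.
    """
    if not nics:
        raise ValueError("at least one NIC is required")
    plan = {}
    for i, net in enumerate(networks):
        if i < len(nics):
            plan[net] = (nics[i], None)  # dedicated NIC, no tagging needed
        else:
            plan[net] = (nics[-1], first_vlan_id + i - len(nics))  # tagged VLAN
    return plan
```

With four networks and two NICs, `plan_interfaces(["admin", "mgmt", "storage", "public"], ["eth0", "eth1"])` leaves admin and mgmt untagged on eth0 and eth1, and places storage and public on eth1 as VLANs 100 and 101. Listing the Admin (PXE) network first keeps it untagged, which matters because PXE boot traffic is normally untagged.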

The network picture shows how ODL connects to Neutron through the ML2 plugin and to nova-compute through the OVS bridge. It is not yet finished: Ceph storage and the approach with ODL in a Docker container will be added.

Storage setup

Local storage will be used.

OUTSTANDING - AGREEMENT ON USE OF CEPH - this needs confirmation from the FUNCTEST team

NTP setup

Multiple labs will eventually be working together across geographic boundaries.

- All systems should use multiple NTP Servers

- Timezone should be set to UTC for all systems

- Centralized logging should be configured with UTC timezone

- Describe the detailed setup
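The first two NTP rules can be checked with a short script. The sketch below assumes ntpd configured through an `ntp.conf`-style file (the `ntp` package appears in the CentOS package list above). It treats "multiple" as at least two servers; both the threshold and the helper names are assumptions. Local reference clocks (`127.127.x.x` pseudo-addresses) are not counted as servers.

```python
import time


def ntp_servers(conf_text):
    """Return remote hosts named on `server` or `pool` lines of ntp.conf text."""
    hosts = []
    for line in conf_text.splitlines():
        fields = line.split("#", 1)[0].split()  # drop comments, then tokenize
        if len(fields) >= 2 and fields[0] in ("server", "pool"):
            if not fields[1].startswith("127.127."):  # skip local refclocks
                hosts.append(fields[1])
    return hosts


def time_config_problems(conf_text, utc_offset_seconds, min_servers=2):
    """Return human-readable problems; an empty list means both rules are met."""
    problems = []
    servers = ntp_servers(conf_text)
    if len(servers) < min_servers:
        problems.append(f"only {len(servers)} NTP server(s) configured, need {min_servers}")
    if utc_offset_seconds != 0:
        problems.append(f"timezone offset is {utc_offset_seconds} s, expected UTC")
    return problems


def local_utc_offset_seconds():
    """Current UTC offset of this host's local timezone, in seconds."""
    return time.localtime().tm_gmtoff
```

On a node, `time_config_problems(open("/etc/ntp.conf").read(), local_utc_offset_seconds())` returns an empty list when both rules are met. On CentOS 7 you can set the timezone itself with `timedatectl set-timezone UTC`.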