
Huawei hosts a server cluster environment for OPNFV projects (Bootstrap/Get Started [BGS], Continuous Integration [Octopus], …) that is accessible globally via VPN over the Internet.

In line with the network topology of the BGS and Octopus projects, this server cluster environment in Xi’an, China (one of Huawei's global NFV Open Labs) includes three controller nodes and two compute nodes. Every controller and compute node has the same hardware configuration: CPU: 2 x Ivy Bridge-EP, 10 cores, 2.4 GHz; RAM: 128 GB; hard disk: 2 x 600 GB. To support Internet access and load balancing, the environment also includes other necessary equipment, e.g. the gateway router and the jump server.
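As a quick sanity check on the figures above, the aggregate capacity of the cluster can be worked out from the per-node specification. The sketch below is illustrative only: the `NodeSpec` class and `cluster_totals` function are hypothetical names, and it assumes "2 x ... 10 core" means two sockets with 10 cores each.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class NodeSpec:
    """Per-node hardware as listed above (identical for every node)."""
    sockets: int = 2            # 2 x Ivy Bridge-EP
    cores_per_socket: int = 10  # assumption: 10 cores per socket
    ram_gb: int = 128
    disks: int = 2
    disk_gb: int = 600


def cluster_totals(node_count: int, spec: NodeSpec = NodeSpec()) -> dict:
    """Sum raw cores, RAM and disk over identical nodes."""
    return {
        "nodes": node_count,
        "cores": node_count * spec.sockets * spec.cores_per_socket,
        "ram_gb": node_count * spec.ram_gb,
        "disk_gb": node_count * spec.disks * spec.disk_gb,
    }


# Three controller nodes plus two compute nodes.
totals = cluster_totals(3 + 2)
print(totals)
# {'nodes': 5, 'cores': 100, 'ram_gb': 640, 'disk_gb': 6000}
```

These are raw totals; usable capacity will be lower once the controller services and any disk redundancy are accounted for.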

To connect to this environment, a remote user/client (e.g. a PC with Internet access using a browser or ssh) must be able to reach the Internet (http://publicip<addr>) and is then allocated a private IP address (192.168.x.y) by the LNS (L2TP Network Server) via L2TP over IPsec. The remote user/client can use a VPN client for the connection, e.g. download the “Secoway VPN Client” software (copyright of Huawei; click “here” to download, free to use for OPNFV) and configure it once registration and the access application are complete. An email with guidelines will be sent to you showing how to configure the client and verify that you can access the environment. The server cluster environment for the BGS project is illustrated below:

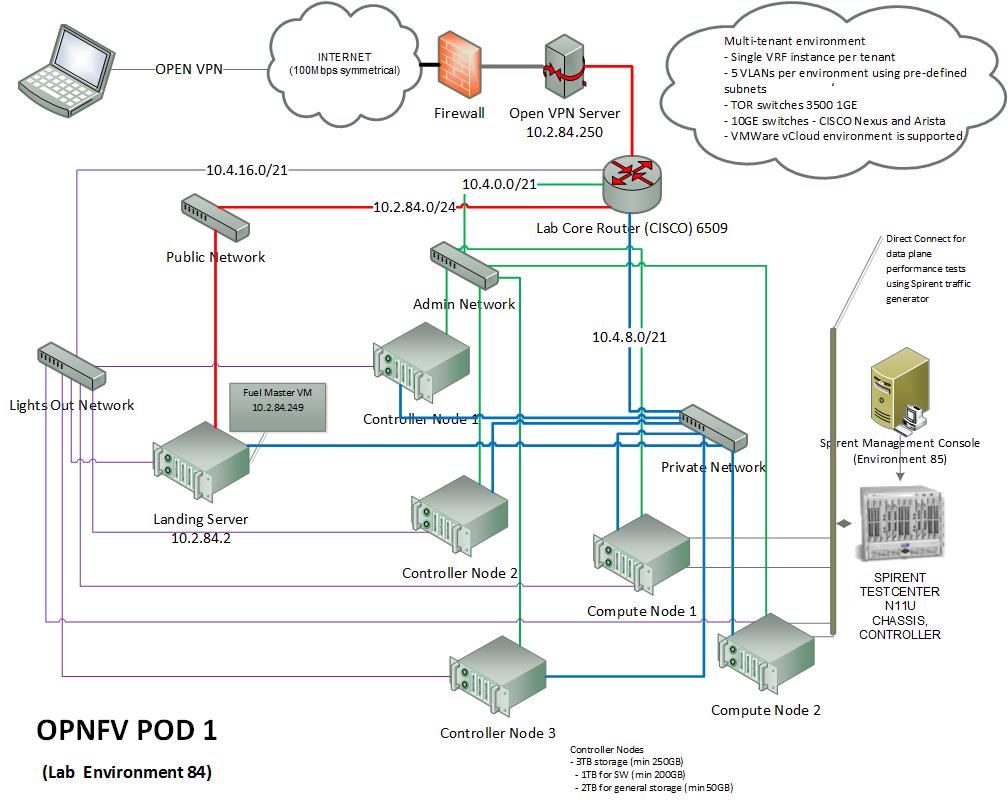
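Once the VPN tunnel is up, one simple check is whether the address handed out by the LNS falls in the expected private range. The sketch below uses Python's standard `ipaddress` module. It assumes the pool is the whole of 192.168.0.0/16, since the text only gives 192.168.x.y, and `is_vpn_address` is a hypothetical helper name.

```python
import ipaddress

# Assumption: the LNS allocates from 192.168.0.0/16 ("192.168.x.y" above).
VPN_POOL = ipaddress.ip_network("192.168.0.0/16")


def is_vpn_address(addr: str) -> bool:
    """Return True if addr is a valid IP address inside the assumed VPN pool."""
    try:
        return ipaddress.ip_address(addr) in VPN_POOL
    except ValueError:
        # Not a parseable IP address at all.
        return False


print(is_vpn_address("192.168.10.20"))  # True
print(is_vpn_address("10.0.0.1"))       # False
```

If the real pool turns out to be narrower, only the `VPN_POOL` constant needs to change.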

Currently 3 "PODs" are operational (a BGS POD has 6 servers), and the short-term plan is to stand up 5 PODs. The first 2 PODs are being used by the BGS project (which is driving compute, network and storage requirements). For support reasons all PODs will have identical network configurations; however, exceptions can be made if a project has a specific need.

Current POD assignments …

- POD 1 - Bootstrap/Get started - Development

- POD 2 - Bootstrap/Get started - Run/Verify

- POD 3 - Characterize vSwitch Performance (special requirements for test equipment and network configurations)

- POD 4 - Available April - to be assigned

- POD 5 - Available April - to be assigned

Remote access uses OpenVPN via a 100 Mbps (symmetrical) Internet link. VPN quickstart instructions are here: opnfv_intel_hf_testbed_-_quickstart_vpn_.docx

All servers are PXE boot enabled and can also be accessed "out-of-band" using the lights-out network with RMM/BMC. Servers are current or recent generation Xeon-DP … specs for each POD/server to be documented here.

BGS environment details are here: https://wiki.opnfv.org/get_started/get_started_work_environment. The basic compute setup is as follows:

- 3 x Control nodes (for HA/clustered setup of OpenStack and OpenDaylight)

- 2 x Compute nodes (to bring up/run VNFs)

- 1 x Jump Server/Landing Server in which the installer runs in a VM
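The layout above (3 control, 2 compute, 1 jump server, i.e. 6 servers per POD) can be captured as a small check against a POD inventory. This is only a sketch: the role labels, the host names in the example and the `check_pod` function are hypothetical and do not come from the test-bed configuration files.

```python
from collections import Counter

# Expected role counts for a BGS POD, taken from the list above.
EXPECTED_ROLES = {"control": 3, "compute": 2, "jump": 1}


def check_pod(nodes: dict) -> list:
    """Compare a {hostname: role} inventory with the expected BGS layout.

    Returns a list of human-readable problems; an empty list means the
    inventory matches.
    """
    counts = Counter(nodes.values())
    problems = []
    for role, want in EXPECTED_ROLES.items():
        got = counts.get(role, 0)
        if got != want:
            problems.append(f"{role}: expected {want}, found {got}")
    # Flag roles that are not part of the BGS layout at all.
    for role in sorted(counts.keys() - EXPECTED_ROLES.keys()):
        problems.append(f"unexpected role: {role}")
    return problems


# Hypothetical host names, for illustration only.
pod1 = {
    "ctl1": "control", "ctl2": "control", "ctl3": "control",
    "cmp1": "compute", "cmp2": "compute",
    "jump1": "jump",
}
print(check_pod(pod1))  # []
```

A check of this kind is mainly useful when a project requests an exception to the standard layout, because the deviation then shows up explicitly.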

Each POD (environment) supports 5 VLANs using pre-defined subnets. BGS networks are 1) Public 2) Private 3) Admin 4) Lights-out. Configuration details are here: opnfv_intel_hf_testbed_-_configuration.xlsx. The information includes the physical network configuration (IPs etc.), NIC MAC addresses, IPMI logins, etc. Full specs for each node will also be captured here.

Collaborating

- Best practices for collaborating in the test environment are being developed and will be documented here

- The landing server is provisioned with CentOS 7. The project decides configurations for other servers and provisions accordingly

- The landing server has a VNC server for shared VNC sessions

- While VNC allows screens to be shared, an audio session may be useful … set up an audio bridge or try a collaboration tool such as Lync, WebEx, Google Hangouts, Blue Jeans, etc.

Figure 1: Intel OPNFV test-bed environment - overview of POD 1


TAGS: BGS, Bootstrap Getting Started, Intel, Test, Lab