
Note: this is a working page. No decision has been made yet; the content is to be discussed during the Functest and test weekly meetings.

Admin

It was decided to adopt the MongoDB/API/JSON approach (see the dashboard meeting minutes).

Introduction

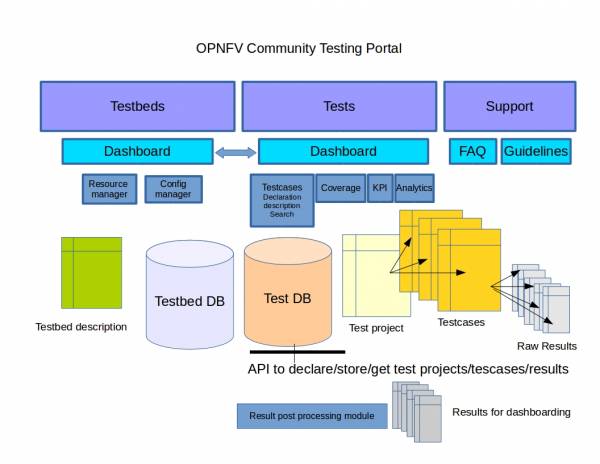

The test projects all generate results in different formats. The goal of a testing dashboard is to provide a consistent view of the different tests from the different projects.

Overview

We may describe the overall system dealing with tests and testbeds as follows:

We may distinguish:

- the data collection

- the production of the test dashboard

Test collection

The test collection API is under review. It consists of a simple REST API associated with a simple JSON format; the results are collected in a MongoDB database.

Most of the tests will be run from the CI, but test collection shall not affect the CI in any way. That is one of the reasons to externalize data collection rather than use a Jenkins plugin.

Dashboard

A module shall extract the raw results pushed by the test projects to create a testing dashboard. This dashboard shall be able to show:

- The number of testcases / test projects / people involved / organizations involved

- All the tests executed on a given POD

- All the tests from a test project

- All the testcases executed on all the PODs

- All the testcases in critical state for version X installed with installer I on testbed T

- ….

It shall be possible to filter per:

- POD

- Test Project

- Testcase

- OPNFV version

- Installer

- …
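The filters listed above could be applied to raw results with a small helper. This is only a minimal sketch: `project_name`, `case_name` and `pod_id` follow the result format used elsewhere on this page, while `installer` and `version` are hypothetical fields, since the data model is not agreed yet.

```python
def filter_results(results, **criteria):
    """Return the results whose fields match every given criterion.

    A criterion set to None is ignored, so a caller can pass only the
    filters actually selected in the dashboard.
    """
    active = {key: value for key, value in criteria.items() if value is not None}
    return [result for result in results
            if all(result.get(key) == value for key, value in active.items())]


# Hypothetical sample data: "installer" and "version" are assumed fields.
SAMPLE = [
    {"project_name": "functest", "case_name": "vPing", "pod_id": 1,
     "installer": "fuel", "version": "Arno SR1"},
    {"project_name": "functest", "case_name": "vPing", "pod_id": 2,
     "installer": "foreman", "version": "Arno SR1"},
    {"project_name": "functest", "case_name": "Rally", "pod_id": 1,
     "installer": "fuel", "version": "Arno SR1"},
]

vping_on_pod1 = filter_results(SAMPLE, case_name="vPing", pod_id=1)
```

Passing the filters as keyword arguments keeps the helper independent of the final list of criteria, which is still an open question.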

For each test case we shall be able to see:

- Error rate versus time

- Duration versus time

- Packet loss

- Delay

- ….

We shall also be able to see the severity of the errors (the severity levels are to be commonly agreed):

| description | comment |
|---|---|
| critical | testcase failed on 80% of the installers? |
| major | |
| minor | |
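Once the levels are agreed, the severity could be derived from the share of installers on which a testcase failed. The sketch below is purely illustrative: only the 80% figure comes from the table (where it is still a question), and the `major_ratio` threshold and the rule for `minor` are invented placeholders.

```python
def severity(failed_installers, total_installers,
             critical_ratio=0.8, major_ratio=0.5):
    """Classify a testcase from the share of installers on which it failed.

    critical_ratio mirrors the tentative 80% in the table above;
    major_ratio is a made-up placeholder, no value has been agreed.
    """
    if total_installers <= 0:
        raise ValueError("total_installers must be positive")
    ratio = failed_installers / total_installers
    if ratio >= critical_ratio:
        return "critical"
    if ratio >= major_ratio:
        return "major"
    if ratio > 0:
        return "minor"
    return None  # no failure, hence no severity
```

Keeping the thresholds as parameters means the function does not need to change when the projects settle on actual values.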

Role of the test projects

Each test project shall:

- declare itself using test collection API (see Description)

- declare the testcases

- push the results into the test DB

- process the raw results to provide a set of pre-formatted JSON result files according to what the project wants to show. These JSON files will be used to automatically generate the dashboard (web page including JS graphs)

Open Questions

The test projects shall agree on

- the data model dealing with the test dashboarding (testbed / Testcase / test project / Test results / version / installers / ..)

- the filter criteria (pod, installer, version, date (30 days/1 year/since beginning of the project), ..)

- the labels of the graphs (whether they shall be customizable and whether new labels may be added; in any case all the test projects shall use the same ones) ⇒ shall be described in the JSON for the dashboard

Proposal for a simple home-made solution

Based on Arno, the files related to the result collection API could look like:

- test-projects.json: description of the test projects

- test-functest.json: description of the functest test project

- test-functest-vping.json: raw results of 3 executions of vPing on 2 PODs (LF1 and LF2)

The projects are described as follows in the database (http://213.77.62.197:80/test_projects):

    {
        "meta": {
            "total": 1,
            "success": true
        },
        "test_projects": [
            {
                "test_cases": 0,
                "_id": "55e05b08514bc52914149f2d",
                "creation_date": "2015-08-28 12:58:48.602898",
                "name": "functest",
                "description": "OPNFV Functional testing project"
            }
        ]
    }

To list the testcases of this project, query http://213.77.62.197:80/test_projects/functest/cases:

    {
        "meta": {
            "total": 1,
            "success": true
        },
        "test_cases": [
            {
                "_id": "55e05dba514bc52914149f2e",
                "creation_date": "2015-08-28 13:10:18.478986",
                "name": "vPing",
                "description": "This test consist in send exchange PING requests between two created VMs"
            }
        ]
    }

Functest used to run 4 suites automatically:

- vPing

- ODL

- Rally

- Tempest

The results are available on Jenkins. Functest calls the API to store the raw results of each test:

curl -X POST -H "Content-Type: application/json" -d '{"details": {"timestart": 123456, "duration": 66, "status": "OK"}, "project_name": "functest", "pod_id": 1, "case_name": "vPing"}' http://213.77.62.197:80/results

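From a Python client the API can be invoked as follows:

    import json
    import logging

    import requests

    logger = logging.getLogger(__name__)
    # Results endpoint, same URL as in the curl call above
    TEST_DB = "http://213.77.62.197:80/results"

    def push_results_to_db(payload):
        """POST one raw vPing result to the test collection API."""
        url = TEST_DB
        params = {"project_name": "functest", "case_name": "vPing", "pod_id": 1,
                  "details": payload}
        headers = {'Content-Type': 'application/json'}
        r = requests.post(url, data=json.dumps(params), headers=headers)
        logger.debug(r)

where the payload variable is:

    {'timestart': start_time_ts, 'duration': duration, 'status': test_status}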

Please note that you can put whatever you want in your payload. For vPing we store only the useful information; for Rally we can directly store the whole JSON report already built by Rally (for example the JSON result for the Rally opnfv-authenticate scenario).
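For a simple case such as vPing, the payload could be built by timing the test run. A minimal sketch, assuming the test is exposed as a callable returning True on success: the names `start_time_ts`, `duration` and `test_status` come from the payload above, while `run_test` and the "KO" status value are assumptions made for illustration.

```python
import time


def build_payload(run_test):
    """Run a test callable and return a vPing-style payload dict.

    run_test is a hypothetical callable returning True on success.
    """
    start_time_ts = time.time()          # wall-clock start, as in "timestart"
    started = time.monotonic()           # monotonic clock for the duration
    succeeded = run_test()
    duration = round(time.monotonic() - started, 1)
    test_status = "OK" if succeeded else "KO"  # "KO" is an assumed value
    return {"timestart": start_time_ts,
            "duration": duration,
            "status": test_status}


payload = build_payload(lambda: True)
```

The resulting dict can be passed as the `details` field of the request body sent to the results API.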

The results can then be seen in the DB:

http://213.77.62.197:80/results?projects=functest&case=vPing

    {
        "meta": {
            "total": 17
        },
        "test_results": [
            {
                "project_name": "functest",
                "description": null,
                "creation_date": "2015-08-28 16:43:00.965000",
                "case_name": "vPing",
                "details": {
                    "timestart": 123456,
                    "duration": 66,
                    "status": "OK"
                },
                "_id": "55e08f94514bc52b1791f949",
                "pod_id": 1
            },
            ..........
            {
                "project_name": "functest",
                "description": null,
                "creation_date": "2015-09-04 07:17:51.239000",
                "case_name": "vPing",
                "details": {
                    "timestart": 1441350977.858555,
                    "duration": 84.5,
                    "status": "OK"
                },
                "_id": "55e9459f514bc52b1791f959",
                "pod_id": 1
            }
        ]
    }
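The same query could be issued from Python. This is a sketch only: the `projects` and `case` query parameters and the `test_results` key are taken from the example above, while the function names and the timeout value are assumptions.

```python
from urllib.parse import urlencode

BASE_URL = "http://213.77.62.197:80"  # server used in the examples on this page


def results_url(project, case, base_url=BASE_URL):
    """Build the query URL for the results of one testcase."""
    query = urlencode({"projects": project, "case": case})
    return f"{base_url}/results?{query}"


def fetch_results(project, case):
    """Return the list of raw results stored for one testcase."""
    import requests  # third-party; imported here so results_url works without it

    response = requests.get(results_url(project, case), timeout=10)
    response.raise_for_status()
    return response.json()["test_results"]


url = results_url("functest", "vPing")
```

Building the URL in a separate function makes it possible to check the query string without contacting the server.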

The raw results will be stored in the MongoDB database (JSON files).

A module will perform post-processing (cron) to generate JSON files usable for dashboarding (as in Bitergia). In the example we build a JSON file for the LF2 POD (we could do it for the last 30 days, the last 365 days, or since the beginning).

Several files shall be produced according to the filters. For vPing we shall produce:

- result-functest-vPing-<date>-<POD>-<installer>-<version>.json

with

- date = 30 (days), 365 (days), since the beginning of the project

- POD = LF-test1, Dell-test2, Orange-test1,…

- installer = foreman, fuel, joid

- version = stable Arno, Arno SR1, Stable Brahmaputra, …
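The list of files to produce is the combination of all filter values. A minimal sketch of how the names could be generated, assuming the pattern above: the function name is hypothetical, and writing the version as "Arno-SR1" (hyphen instead of a space) is an assumption, since the page does not say how values are encoded in file names.

```python
from itertools import product


def result_file_names(dates, pods, installers, versions,
                      project="functest", case="vPing"):
    """Return one file name per (date, POD, installer, version) combination."""
    return [f"result-{project}-{case}-{date}-{pod}-{installer}-{version}.json"
            for date, pod, installer, version
            in product(dates, pods, installers, versions)]


names = result_file_names(
    dates=["30", "365"],
    pods=["LF-test1", "Dell-test2"],
    installers=["foreman", "fuel", "joid"],
    versions=["Arno-SR1"],  # assumed spelling, see above
)
```

With 2 periods, 2 PODs, 3 installers and 1 version this already yields 12 files per testcase, which may be worth keeping in mind when choosing the filter criteria.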

The result-functest-vPing.json (all installers/POD/version since first day of the project) could look like:

    [
        {
            "description": "vPing results for Dashboard"
        },
        {
            "info": {
                "xlabel": "time",
                "type": "graph",
                "ylabel": "duration"
            },
            "data_set": [
                {
                    "y": 66,
                    "x": "2015-08-28 16:43:00.965000"
                },
                .................
                {
                    "y": 84.5,
                    "x": "2015-09-04 07:17:51.239000"
                }
            ],
            "name": "vPing duration"
        },
        {
            "info": {
                "type": "bar"
            },
            "data_set": [
                {
                    "Nb Success": 17,
                    "Nb tests": 17
                }
            ],
            "name": "vPing status"
        }
    ]
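The post-processing step for vPing could be sketched as follows. The field names are those of the raw results and of the dashboard example on this page; the function name is hypothetical, and using a JSON list as the top-level container is an assumption made so that the output is valid JSON.

```python
import json


def vping_dashboard(results):
    """Turn raw vPing results into the dashboard structure described above."""
    durations = [{"x": r["creation_date"], "y": r["details"]["duration"]}
                 for r in results]
    nb_success = sum(1 for r in results if r["details"]["status"] == "OK")
    return [
        {"description": "vPing results for Dashboard"},
        {"name": "vPing duration",
         "info": {"type": "graph", "xlabel": "time", "ylabel": "duration"},
         "data_set": durations},
        {"name": "vPing status",
         "info": {"type": "bar"},
         "data_set": [{"Nb tests": len(results), "Nb Success": nb_success}]},
    ]


# The two raw results shown earlier on this page, reduced to the fields used.
RAW = [
    {"creation_date": "2015-08-28 16:43:00.965000",
     "details": {"timestart": 123456, "duration": 66, "status": "OK"}},
    {"creation_date": "2015-09-04 07:17:51.239000",
     "details": {"timestart": 1441350977.858555, "duration": 84.5, "status": "OK"}},
]

dashboard = vping_dashboard(RAW)
print(json.dumps(dashboard, indent=2))
```

A cron job could call such a function once per filter combination and write each result to the corresponding JSON file.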

Solutions for dashboarding

- home-made solution (see above)

- Bitergia, as used for code in OPNFV http://projects.bitergia.com/opnfv/browser/

- Logstash plugin for Jenkins that could be used with an ELK solution

- remote Jenkins API + Drupal + JS lib (jqplot, ..)