
Virtual Provider Edge

— Palani Chinnakannan 2014/11/10 20:31

Introduction

A virtual provider edge (vPE) is a virtual network function that provides the traditional provider edge functionality in a virtual environment. This project provides the testing methodology and test cases for integration testing of the OPNFV platform when it hosts a generic VNF such as a vPE, and for measuring the performance characteristics associated with vPE traffic patterns. The project uses L3VPN connectivity over an MPLS backbone network to provide private and secure communication between all devices belonging to a tenant located at multiple sites. The document covers the following elements:

- vPE Topology

- High Level Software Architecture

- Hardware Requirements

- Software Components and Requirements

- NFVI Platform Functional Test Cases

- Platform Performance Test Cases

vPE Topology

The basic vPE topology comprises a single instance of a virtualized provider edge (vPE VNF) that provides L3VPN-based connectivity between all sites of a given tenant. The advanced configuration comprises three or more vPE VNFs providing connectivity to multiple sites belonging to a customer, with the sites distributed between the vPE VNFs. In both cases, multiple tenants are emulated, each with four or more sites, to provide a realistic traffic pattern that stresses the NFVI infrastructure through the vPE VNFs. In the basic case, the vPE VNF is hosted on a single server, while in the advanced case the vPE VNFs are tested on multiple servers. The environment also provides the traffic flow between the CE devices belonging to a tenant.

Basic vPE Topology

The following figure provides the basic topology for the vPE use case functional and performance testing.

The following are the salient aspects of this figure:

- The SUT (system under test) is the OPNFV platform together with the elements of the VNF (vPE), such as QEMU and virtio, that lie outside the bounds of the guest kernel and provide the interface to the VNF.

- A single instance of an open source or vendor-provided vPE is instantiated on the OPNFV platform.

- The logical topology comprises four tenants named VPN1 through VPN4. Each tenant owns four or more VPN sites, half of them directly connected to the VNF under test (VPNx-1, VPNx-2, …) and the rest connected to an emulated PE router (VPNx-3, VPNx-4, …). The above figure shows just four sites per tenant. However, the sites are scaled up in the performance testing to meet the traffic profiles listed therein. This provides enough routes to stress the vPE VNF instance, which in turn stresses the OPNFV platform.

- The logical topology of CE/PE devices is emulated using an IXIA device, with two ports allocated for one instance of this topology. The tests create multiple instances of this topology for reliability testing, and therefore require 8 to 16 IXIA ports.
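The tenant and site layout described in the list above can be sketched in a few lines of Python. This is only an illustration: the function names `build_sites` and `ixia_ports_needed`, the attachment labels `"vPE"` and `"emulated-PE"`, and the requirement that the site count be even are assumptions made for the sketch, not part of the test plan.

```python
def build_sites(tenants=4, sites_per_tenant=4):
    """Map each site name (VPN<t>-<s>) to the router it attaches to.

    The first half of a tenant's sites attach to the vPE under test and
    the second half to the emulated PE router. The labels are hypothetical.
    """
    if sites_per_tenant < 4 or sites_per_tenant % 2:
        # Assumption: "half of them" implies an even count of at least four.
        raise ValueError("expected an even number of sites, at least 4")
    layout = {}
    for tenant in range(1, tenants + 1):
        for site in range(1, sites_per_tenant + 1):
            direct = site <= sites_per_tenant // 2
            layout[f"VPN{tenant}-{site}"] = "vPE" if direct else "emulated-PE"
    return layout


def ixia_ports_needed(topology_instances, ports_per_instance=2):
    """Two IXIA ports are allocated per instance of the topology."""
    return topology_instances * ports_per_instance


if __name__ == "__main__":
    for name, attachment in build_sites().items():
        print(name, "->", attachment)
    print("ports for 4 instances:", ixia_ports_needed(4))
```

With the defaults this yields 16 sites, and 4 to 8 topology instances at two ports each correspond to the 8 to 16 IXIA ports stated above.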

| Entity | V4 Address | V6 Address | VLAN | AS Number | RD | VRF Name | RT | Advt Prefixes | Members |
|---|---|---|---|---|---|---|---|---|---|
| CE-1-1 | 15.1.1.1 | | 101 | 101 | 65001 | vrf-CE-1-1 | 65001:101 | 14.1.1.0/24 thru 14.1.254.0/25 | CE-1-2, CE-1-3, CE-1-4 |
| CE-1-2 | 15.1.2.1 | | 102 | 102 | 65002 | vrf-CE-1-2 | 65002:102 | 14.2.1.0/24 thru 14.2.254.0/25 | CE-1-1, CE-1-3, CE-1-4 |
| CE-1-3 | 15.1.3.1 | | 103 | 103 | 65003 | vrf-CE-1-3 | 65003:103 | 14.3.1.0/24 thru 14.3.254.0/25 | CE-1-1, CE-1-2, CE-1-4 |
| CE-1-4 | 15.1.4.1 | | 104 | 104 | 65004 | vrf-CE-1-4 | 65004:104 | 14.4.1.0/24 thru 14.4.254.0/25 | CE-1-1, CE-1-2, CE-1-3 |

TODO: Complete the above table after review
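As a rough illustration of how the advertised prefix ranges in the table could be expanded for a traffic generator, the following sketch uses Python's standard `ipaddress` module. The function names are hypothetical. The table writes the last prefix of each range with a /25 mask; this sketch assumes a uniform /24 for every prefix, which may not match the intended plan once the table is reviewed.

```python
import ipaddress


def advertised_prefixes(site_index, count=254):
    """Yield 14.<site>.1.0/24 through 14.<site>.<count>.0/24.

    Assumption: every prefix in the range is a /24.
    """
    for third_octet in range(1, count + 1):
        yield ipaddress.ip_network(f"14.{site_index}.{third_octet}.0/24")


def ce_address(site_index):
    """IPv4 address of CE-1-<site>, following the 15.1.<site>.1 pattern."""
    return ipaddress.ip_address(f"15.1.{site_index}.1")


if __name__ == "__main__":
    prefixes = list(advertised_prefixes(1))
    print(ce_address(1), "advertises", len(prefixes), "prefixes")
    print("first:", prefixes[0], "last:", prefixes[-1])
```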

Single Instance vPE deployment configuration

The following table provides the deployment configuration for the basic vPE topology test case. This topology is exercised multiple times during functional testing for studying the robustness of the OPNFV platform. Therefore, the numbers specified in the following table are scaled as per the number of instances instantiated.

| Element | Minimum Configuration | Medium Configuration | Large Configuration |
|---|---|---|---|
| VCPU | 1 | 4 | 8 |
| Memory | 2G | 4G | 8G |
| vNIC Data | 2x10G, 2x40G | 2x10G, 2x40G | 2x10G, 2x40G |
| vNIC Mgmt. | 1x1G | 1x1G | 1x1G |
| vNIC Control | 1x1G | 1x1G | 1x1G |
| Serial Console | 1 | 1 | 1 |
| Disk | 2x4G | 2x8G | 2x16G |
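Because the per-instance numbers scale with the number of instances, the total footprint of a test run is simple multiplication. The sketch below shows that arithmetic for the vCPU, memory, and disk rows. The names `PROFILES` and `total_resources` are hypothetical, and the "G" suffix in the table is assumed to mean gigabytes.

```python
# Per-instance values taken from the deployment configuration table.
# Disk is recorded as total capacity: 2x4G, 2x8G, 2x16G.
PROFILES = {
    "minimum": {"vcpu": 1, "memory_gb": 2, "disk_gb": 2 * 4},
    "medium": {"vcpu": 4, "memory_gb": 4, "disk_gb": 2 * 8},
    "large": {"vcpu": 8, "memory_gb": 8, "disk_gb": 2 * 16},
}


def total_resources(profile, instances):
    """Scale one profile linearly by the number of vPE instances."""
    if instances < 1:
        raise ValueError("instances must be at least 1")
    per_instance = PROFILES[profile]
    return {name: value * instances for name, value in per_instance.items()}


if __name__ == "__main__":
    print(total_resources("medium", 4))
```

For example, four medium instances need 16 vCPUs, 16 GB of memory, and 64 GB of disk under these assumptions.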

Advanced vPE Topology

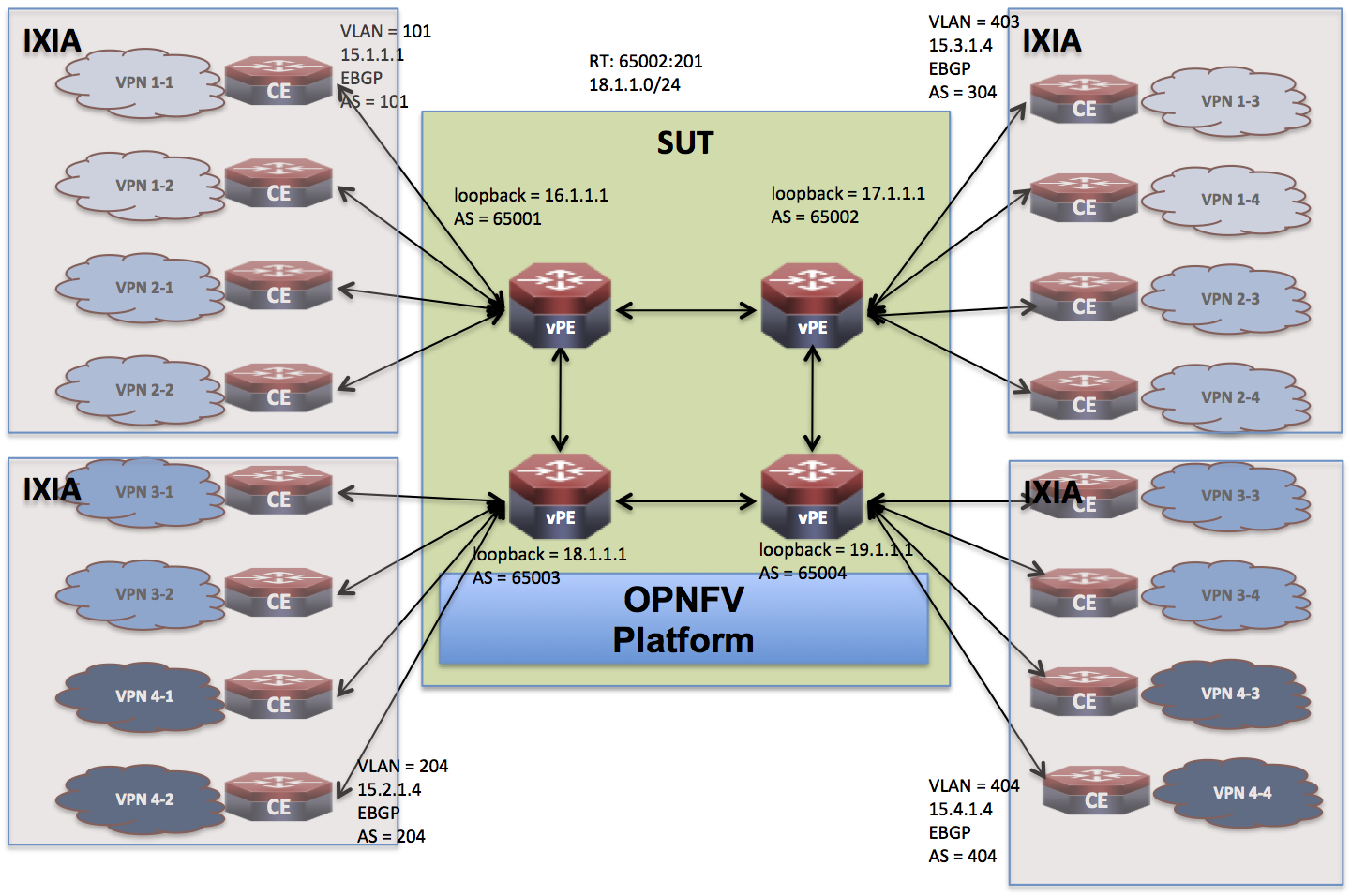

The following figure provides the advanced topology for the vPE use case functional and performance testing.

The following are the salient aspects of the above topology:


- The topology consists of four vPE VNFs that are instantiated on the SUT. The test cases identified later specify how the four instances are deployed, for example on the same physical server or spread across four servers.

- The CE devices (emulated) are distributed across the four instances.

- The focus of this topology is to study the behavior of the OPNFV platform under multiple VNF deployments.

TODO: Add a table like the basic test case here after review

Performance Tuning Characteristics

The following table provides the performance tuning characteristics. Some of these characteristics are varied in the test cases to study the behavior of the OPNFV platform. This table requires an in-depth review and ongoing updates to select the best performance tuning characteristics.

| Entity | Performance Tuning Characteristics |
|---|---|
| Host CPU | Sandy Bridge, host CPU, sockets N, cores 1, threads 1, vCPU and I/O thread pinning, automatic NUMA balancing, APICv, EOI acceleration |
| Memory | Balloon 50%, hard_limit, soft_limit, swap_hard_limit, huge_pages |
| vNIC | Passthrough, SR-IOV, vhost-net, multi-queue virtio-net, ARP filter, MTU size, bridge zero-copy transmit |
| Queue sizes | Rx/Tx queue sizes 32K |
| Disk | virtio-scsi |
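Several of these knobs (vCPU pinning, huge pages, `hard_limit`, multi-queue virtio-net with vhost) correspond to elements of a libvirt domain definition. The following sketch builds a small fragment with Python's `xml.etree.ElementTree`. It assumes the guests are managed through libvirt, which this document does not state. The function name, the pinning map, and the example values are hypothetical, and the fragment is deliberately incomplete: it is not a bootable domain.

```python
import xml.etree.ElementTree as ET


def tuning_fragment(vcpu_to_host_cpu, hard_limit_kib, queues):
    """Build a partial libvirt <domain> element with a few tuning knobs."""
    domain = ET.Element("domain", type="kvm")
    vcpu = ET.SubElement(domain, "vcpu", placement="static")
    vcpu.text = str(len(vcpu_to_host_cpu))

    # Pin each guest vCPU to one host CPU.
    cputune = ET.SubElement(domain, "cputune")
    for guest_cpu, host_cpu in sorted(vcpu_to_host_cpu.items()):
        ET.SubElement(cputune, "vcpupin",
                      vcpu=str(guest_cpu), cpuset=str(host_cpu))

    # Back guest memory with huge pages and cap it with hard_limit.
    backing = ET.SubElement(domain, "memoryBacking")
    ET.SubElement(backing, "hugepages")
    memtune = ET.SubElement(domain, "memtune")
    ET.SubElement(memtune, "hard_limit", unit="KiB").text = str(hard_limit_kib)

    # Multi-queue virtio-net served by vhost (source bridge omitted here).
    devices = ET.SubElement(domain, "devices")
    iface = ET.SubElement(devices, "interface", type="bridge")
    ET.SubElement(iface, "model", type="virtio")
    ET.SubElement(iface, "driver", name="vhost", queues=str(queues))
    return domain


if __name__ == "__main__":
    fragment = tuning_fragment({0: 2, 1: 3}, 4 * 1024 * 1024, queues=4)
    print(ET.tostring(fragment, encoding="unicode"))
```

Generating the fragment from parameters makes it easier to vary one characteristic per test run, which is how the table is meant to be used.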

High Level Software Architecture

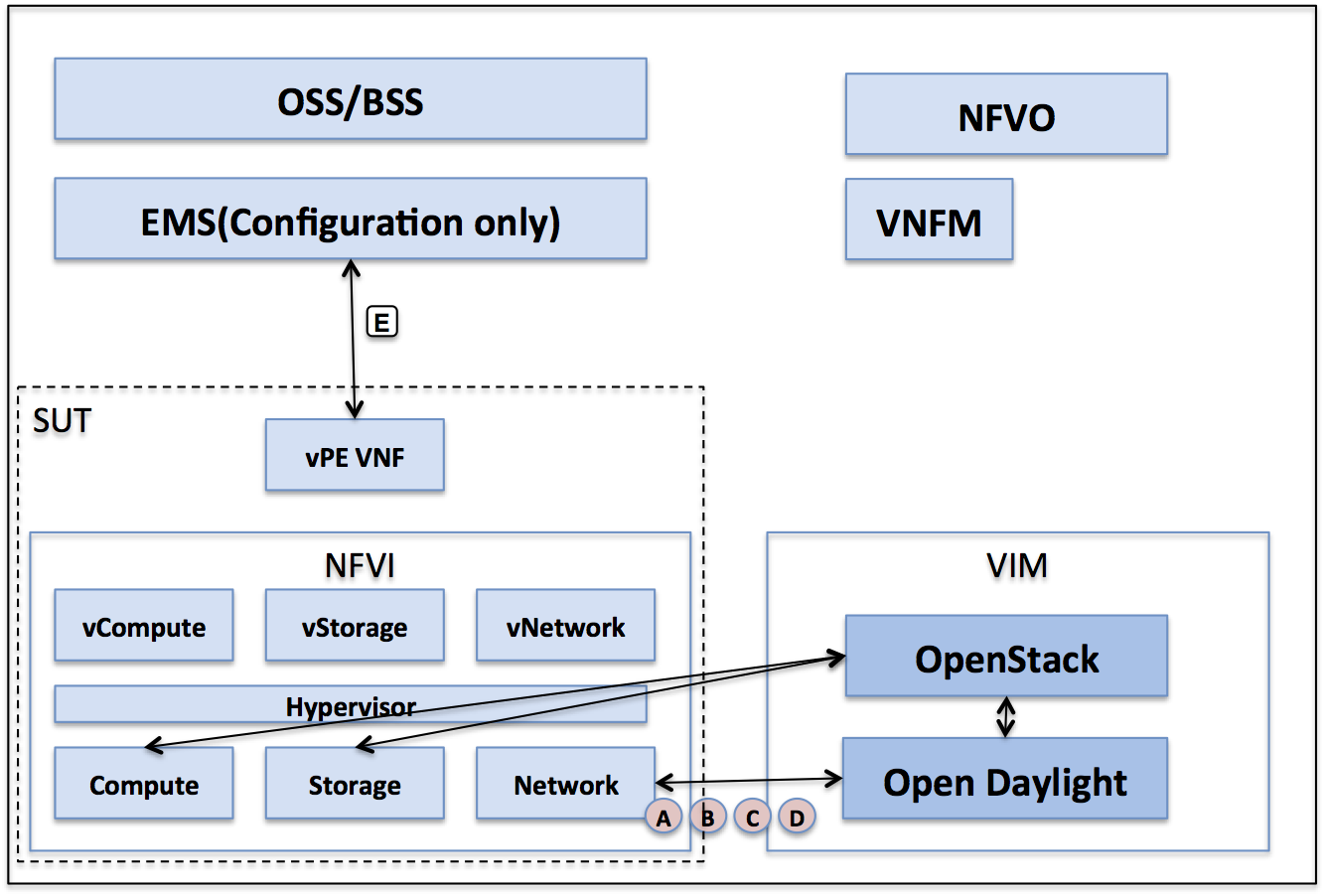

The following figure is the proposed architecture for the vPE functional and performance testing use cases.

The following are the key aspects of the above architecture:

- The architecture is based on the ETSI NFV ISG functional blocks without the reference points.

- The VIM is decomposed into two major blocks: OpenStack and the OpenDaylight (ODL) controller.

- The ODL controller is driven by the OpenStack Neutron component. As shown in the figure, the Neutron plugin and its agent communicate with the ODL controller and do not interact with the compute nodes.

- The ODL controller is responsible for setting up the compute nodes and for programming the OVS switches or any other relevant element. This is indicated using the reference points A, B, C, and D.

- The VNF instantiated on the NFVI is the vPE VNF, although any other VNF can also be adopted into this architecture. The VNF is configured using a configuration component that is traditionally part of the EMS.

Hardware Requirements

The following table provides the hardware elements required for the project.

| Element | Configuration |
|---|---|
| Servers | 1 controller, 3 compute nodes |
| Switch | 1 management switch with 48x1G ports, 1 control switch with 48x1G ports, 1 data switch with 48 ports (mix of 10G and 40G) |
| IXIA | 32-port IXIA controller with 10G and 40G ports |

TODO: Add more details on the server characteristics, switches, ports, etc.

Software Components and Requirements

TODO

Generic Functional Testing

The following table provides a list of high level functional test cases. We need to agree on the columns used and if we need to add any more columns. The following defines the column headers.

- Test Id: Identifier for the test. It starts with the letter 'F' for functional or 'P' for performance, and contains a 3-digit test suite identifier followed by a 3-digit sub test suite or test case identifier. In the case of a sub test suite, a further 3-digit number identifies the test case.

- Title: A brief one-line summary of the test suite or test case.

- Type: Suite or test case. This is redundant with the Test Id; however, the Test Id may appear independently in threads, docs, etc.

- Description/Procedure: For a test suite, the description provides the purpose of the suite. For a test case, the procedure explains clearly what needs to be performed. If required, use a link to document the exact steps.

- Pass/Fail: The criterion for detecting a pass or a fail. Both pass conditions and failure conditions must be documented.

- Target Release: The OPNFV release being targeted.

- Automation Required: Defines whether automation is a must. If automation is required, a pointer to a wiki page that describes the automation is useful.
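The Test Id format described in the list above lends itself to a small validator. The sketch below is one possible reading of that format: it assumes the 3-digit groups are separated by hyphens, as in the identifiers used in the tables (for example `F-001`), and that at most three groups appear. The function name `parse_test_id` and the returned dictionary layout are hypothetical.

```python
import re

# 'F' or 'P', then one to three hyphen-separated 3-digit groups.
# Assumption: hyphens separate every group, not only the first.
TEST_ID = re.compile(r"([FP])-(\d{3})(?:-(\d{3}))?(?:-(\d{3}))?")


def parse_test_id(test_id):
    """Split a Test Id into its kind and its numeric levels."""
    match = TEST_ID.fullmatch(test_id)
    if match is None:
        raise ValueError(f"not a valid Test Id: {test_id!r}")
    kind, *levels = match.groups()
    return {
        "kind": {"F": "functional", "P": "performance"}[kind],
        "levels": [level for level in levels if level is not None],
    }


if __name__ == "__main__":
    for example in ("F-001", "F-501", "P-002-010-003"):
        print(example, parse_test_id(example))
```

The first level is the test suite, the second is a sub test suite or a test case, and the third, when present, is a test case inside a sub test suite.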

| Test Id | Title | Type | Description/Procedure | Pass/Fail Criteria | Target Release | Automation Required |
|---|---|---|---|---|---|---|
| F-001 | OPNFV Platform Cold System Install | Suite | A comprehensive test suite that covers all aspects of system installation to ensure that all components of the system are installed, configured, and functioning properly. | N/A | | Yes |
| F-002 | OPNFV Platform Basic NFV Functions | Suite | A test suite to cover basic NFVI functionality testing such as service orchestration, basic life cycle management, basic network functions (layer 2, layer 4 connectivity), and addressing (v4/v6). Includes tenant workloads that can consume the NFV services. Includes protocol interoperability and multi-tenancy. | N/A | | Yes |
| F-003 | OPNFV Platform PNF Connectivity | Suite | Test suite to test VNF connectivity to a PNF. Covers a wide range of VNF to PNF connections. | N/A | | Yes |
| F-004 | OPNFV Platform Basic Service Chaining | Suite | Test suite for testing service chains. Identifies a comprehensive suite of service chains. | N/A | | Yes |
| F-005 | OPNFV Platform NFV Complete Life Cycle Management | Suite | Test suite to cover elastic scaling of the VNFs based on demand, HA, fault handling, service upgrade, resource allocation and management, etc. | N/A | | Yes |
| F-006 | OPNFV Platform Fault Management | Suite | Test suite covering all aspects of fault monitoring, management, service assurance, service resiliency, etc. | N/A | | No |
| F-007 | OPNFV Platform High Availability | Suite | High availability related tests. Consider combining with the above and calling it FM and HA. | N/A | | No |

vPE Functional Testing

| Test Id | Title | Type | Description/Procedure | Pass/Fail Criteria | Target Release | Automation Required |
|---|---|---|---|---|---|---|
| F-501 | Use Case: vPE Deployment | Suite | A test suite that covers all aspects of virtual provider edge functionality testing. This test suite covers a generic set of vPE functionality such as different routing protocols with the CE devices, a mix of P and PE routers, different address families, and features like QoS, ACL, etc. | N/A | R1.0 | No |

vPE Performance Testing

The vPE performance testing and measurement covers the following aspects of a vPE VNF deployment in an environment that simulates real traffic profiles:

- Topology

- Basic Traffic Profiles

- Advanced Traffic Profiles

Topology

The topology required for the performance measurement is specified above.

Basic Traffic Profiles

Advanced Traffic Profiles

The following table provides the test suites for the vPE performance testing.

| Test Id | Title | Type | Description/Procedure | Pass/Fail Criteria | Target Release | Automation Required |
|---|---|---|---|---|---|---|
| P-001 | vPE Control Plane Performance | Suite | The goal of this test suite is to study and measure the performance of the OPNFV platform components during vPE control plane setup and teardown that results from adding and deleting VPN sites, injecting and withdrawing routes, setting up traffic QoS policies, enabling and disabling BFD, interface flaps, triggering large route downloads, etc. | N/A | R1.0 | No |
| P-002 | vPE Data Plane Performance | Suite | The goal of this test suite is to study and measure the performance of the OPNFV platform components during data forwarding under different load conditions of CEs and VPN sites. Each VPN site is designed to inject the traffic specified in the following tables. The flows are subject to QoS treatment in a specified ratio for RT/AF/BE flows, and a percentage of sites have BFD enabled at different polling intervals. Most of the traffic characteristic parameters, such as the BFD period and the traffic rate for RT, AF, and BE flows, are varied. | N/A | R1.0 | No |
| P-003 | vPE Under Control and Data Plane Stress | Suite | This test suite combines the above two test suites in different proportions to study and measure the performance of the OPNFV platform under steady state data flow with control plane changes. | N/A | R1.0 | No |