Step 4: Creating Networks and Subnets

OPNFV POC OpenStack Setup Instructions.

Execute the following steps on the snsj113 machine (IP: 198.59.156.113); refer to the details of the lab environment.

1. Log in as the odl user and source the credentials.

cd ~/devstack

source openrc admin demo

2. Clone the opnfv_os_ipv6_poc repo.

git clone https://github.com/sridhargaddam/opnfv_os_ipv6_poc.git /opt/stack/opnfv_os_ipv6_poc

3. We want to create the networks and subnets needed for the POC manually, so the local.conf file sets the flag NEUTRON_CREATE_INITIAL_NETWORKS=False. When this flag is set to False, devstack does not create any networks or subnets during the setup phase.
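Before creating anything by hand, it may be worth confirming that the flag is really present in local.conf. The following is a minimal sketch; the function name is hypothetical, and the path in the usage comment assumes local.conf lives in the ~/devstack directory used in step 1.

```shell
# Hypothetical helper: succeeds only if the given local.conf contains an
# uncommented line that sets NEUTRON_CREATE_INITIAL_NETWORKS=False.
initial_networks_disabled() {
    grep -Eq '^[[:space:]]*NEUTRON_CREATE_INITIAL_NETWORKS=False[[:space:]]*$' "$1"
}

# Usage (path is an assumption):
#   initial_networks_disabled ~/devstack/local.conf && echo "flag is set"
```

A commented-out line does not match, so the check fails if the flag was disabled with a leading #.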

4. On snsj113 machine, eth1 provides external/public connectivity (both IPv4 and IPv6). So let us add this interface to br-ex and move the IP address (including the default route) from eth1 to br-ex.

Note: This can be automated in /etc/network/interfaces.

sudo ip addr del 198.59.156.113/24 dev eth1 && sudo ovs-vsctl add-port br-ex eth1 && sudo ifconfig eth1 up && sudo ip addr add 198.59.156.113/24 dev br-ex && sudo ifconfig br-ex up && sudo ip route add default via 198.59.156.1 dev br-ex

# Verify that br-ex now has the IP address 198.59.156.113/24 and the default route is on br-ex

odl@opnfv-openstack-ubuntu:~/devstack$ ip a s br-ex

38: br-ex: <BROADCAST,UP,LOWER_UP> mtu 1430 qdisc noqueue state UNKNOWN group default

link/ether 00:50:56:82:42:d1 brd ff:ff:ff:ff:ff:ff

inet 198.59.156.113/24 brd 198.59.156.255 scope global br-ex

valid_lft forever preferred_lft forever

inet6 fe80::543e:28ff:fe70:4426/64 scope link

valid_lft forever preferred_lft forever

odl@opnfv-openstack-ubuntu:~/devstack$

odl@opnfv-openstack-ubuntu:~/devstack$ ip route

default via 198.59.156.1 dev br-ex

10.134.156.0/24 dev eth0 proto kernel scope link src 10.134.156.113

192.168.122.0/24 dev virbr0 proto kernel scope link src 192.168.122.1

198.59.156.0/24 dev br-ex proto kernel scope link src 198.59.156.113

odl@opnfv-openstack-ubuntu:~/devstack$
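The two checks above can also be scripted instead of read by eye. This is only a sketch: both function names are hypothetical, and they simply parse the text that ip prints, fed in on standard input.

```shell
# Prints the device that carries the default route.
# Expects `ip route` output on stdin.
default_route_dev() {
    awk '$1 == "default" {
        for (i = 2; i < NF; i++)
            if ($i == "dev") { print $(i + 1); exit }
    }'
}

# Prints every IPv4 address (with prefix length) found in
# `ip a s <dev>` output on stdin; inet6 lines are ignored.
ipv4_addrs() {
    awk '$1 == "inet" { print $2 }'
}

# Usage:
#   [ "$(ip route | default_route_dev)" = "br-ex" ] && echo "default route OK"
#   ip a s br-ex | ipv4_addrs | grep -qx '198.59.156.113/24' && echo "address OK"
```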

4. Create a Neutron router which provides external connectivity.

neutron router-create ipv4-router

# Create an external network/subnet using the appropriate values based on the data-center physical network setup.

neutron net-create --router:external ext-net

neutron subnet-create --disable-dhcp --allocation-pool start=198.59.156.251,end=198.59.156.254 --gateway 198.59.156.1 ext-net 198.59.156.0/24

# Associate the ext-net to the neutron router.

neutron router-gateway-set ipv4-router ext-net

# Create an internal/tenant ipv4 network.

neutron net-create ipv4-int-network1

# Create an IPv4 subnet in the internal network.

neutron subnet-create --name ipv4-int-subnet1 --dns-nameserver 8.8.8.8 ipv4-int-network1 20.0.0.0/24

# Associate the ipv4 internal subnet to a neutron router.

neutron router-interface-add ipv4-router ipv4-int-subnet1

# Create a second neutron router in which we will "manually spawn a radvd daemon" to simulate an external IPv6 router.

neutron router-create ipv6-router

# Associate the ext-net to the ipv6-router.

neutron router-gateway-set ipv6-router ext-net

# Create a second internal/tenant ipv4 network.

neutron net-create ipv4-int-network2

# Create an IPv4 subnet for the ipv6-router internal network.

neutron subnet-create --name ipv4-int-subnet2 --dns-nameserver 8.8.8.8 ipv4-int-network2 10.0.0.0/24

# Associate the ipv4 internal subnet2 to ipv6-router.

neutron router-interface-add ipv6-router ipv4-int-subnet2

5. Download the Fedora 22 image, which will be used as the vRouter.

glance image-create --name 'Fedora22' --disk-format qcow2 --container-format bare --is-public true --copy-from https://download.fedoraproject.org/pub/fedora/linux/releases/22/Cloud/x86_64/Images/Fedora-Cloud-Base-22-20150521.x86_64.qcow2

6. Create a keypair.

nova keypair-add vRouterKey > ~/vRouterKey
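The redirect above creates ~/vRouterKey with the shell's default permissions, and OpenSSH normally refuses to use a private key file that other users can read. A minimal sketch of tightening the permissions before the key is used for ssh later on (the function name is hypothetical):

```shell
# Hypothetical helper: make a private key file readable and writable
# by its owner only, as the OpenSSH client expects.
restrict_key_permissions() {
    chmod 600 "$1"
}

# Usage:
#   restrict_key_permissions ~/vRouterKey
```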

7. Create some Neutron ports that would be used for vRouter and the two VMs.

neutron port-create --name eth0-vRouter --mac-address fa:16:3e:11:11:11 ipv4-int-network2

neutron port-create --name eth1-vRouter --mac-address fa:16:3e:22:22:22 ipv4-int-network1

neutron port-create --name eth0-VM1 --mac-address fa:16:3e:33:33:33 ipv4-int-network1

neutron port-create --name eth0-VM2 --mac-address fa:16:3e:44:44:44 ipv4-int-network1

8. Boot the vRouter using Fedora22 image on the Compute node (hostname: opnfv-odl-ubuntu)

nova boot --image Fedora22 --flavor m1.small --user-data /opt/stack/opnfv_os_ipv6_poc/metadata.txt --availability-zone nova:opnfv-odl-ubuntu --nic port-id=$(neutron port-list | grep -w eth0-vRouter | awk '{print $2}') --nic port-id=$(neutron port-list | grep -w eth1-vRouter | awk '{print $2}') --key-name vRouterKey vRouter
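The grep and awk pipeline used for the port IDs is repeated in several commands in this guide. As a sketch, it could be wrapped in a small function that matches the name column exactly. The function name is hypothetical, and the code assumes the table layout printed by neutron port-list has id in the first column and name in the second, which is consistent with the awk '{print $2}' used above.

```shell
# Hypothetical helper: prints the ID of the port whose name column is
# exactly $1. Expects `neutron port-list` table output on stdin.
# Assumes the first two table columns are id and name.
port_id_by_name() {
    awk -F'|' -v name="$1" '
        NF >= 3 {
            id = $2
            n = $3
            gsub(/^[ \t]+|[ \t]+$/, "", id)   # trim padding around the id
            gsub(/^[ \t]+|[ \t]+$/, "", n)    # trim padding around the name
            if (n == name) { print id; exit }
        }'
}

# Usage:
#   neutron port-list | port_id_by_name eth0-vRouter
```

Border lines of the table contain no | separator, so they are skipped, and the header row is ignored unless a port is literally called "name".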

9. Verify that vRouter boots up successfully and the ssh keys are properly injected.

Note: It may take a few minutes for the necessary packages to be installed and the ssh keys to be injected.

nova list

nova console-log vRouter

# Sample output:

[ 762.884523] cloud-init[871]: ec2: #############################################################

[ 762.909634] cloud-init[871]: ec2: -----BEGIN SSH HOST KEY FINGERPRINTS-----

[ 762.931626] cloud-init[871]: ec2: 2048 e3:dc:3d:4a:bc:b6:b0:77:75:a1:70:a3:d0:2a:47:a9 (RSA)

[ 762.957380] cloud-init[871]: ec2: -----END SSH HOST KEY FINGERPRINTS-----

[ 762.979554] cloud-init[871]: ec2: #############################################################
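Since the fingerprints can take a few minutes to appear, the console log may need to be checked repeatedly. The following sketch polls a command until its output contains the fingerprint banner seen in the sample output. Both function names, the attempt count and the delay are hypothetical choices, not part of the POC repo.

```shell
# Succeeds if the text on stdin contains the cloud-init host key banner.
console_has_host_keys() {
    grep -q -e '-----BEGIN SSH HOST KEY FINGERPRINTS-----'
}

# wait_for_host_keys <attempts> <delay-seconds> <command...>
# Runs <command...> up to <attempts> times, sleeping <delay-seconds>
# between tries, until its output contains the banner.
wait_for_host_keys() {
    _attempts=$1
    _delay=$2
    shift 2
    _i=0
    while [ "$_i" -lt "$_attempts" ]; do
        if "$@" 2>/dev/null | console_has_host_keys; then
            return 0
        fi
        sleep "$_delay"
        _i=$((_i + 1))
    done
    return 1
}

# Usage (try for up to 10 minutes):
#   wait_for_host_keys 60 10 nova console-log vRouter
```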

10. In order to verify that the setup is working, let's create two cirros VMs on the ipv4-int-network1 (i.e., vRouter eth1 interface - internal network).

Note: VM1 is created on Control+Network node (i.e., opnfv-openstack-ubuntu)

nova boot --image cirros-0.3.4-x86_64-uec --flavor m1.tiny --nic port-id=$(neutron port-list | grep -w eth0-VM1 | awk '{print $2}') --availability-zone nova:opnfv-openstack-ubuntu --key-name vRouterKey --user-data /opt/stack/opnfv_os_ipv6_poc/set_mtu.sh VM1

Note: VM2 is created on the Compute node (i.e., opnfv-odl-ubuntu). We may have to configure an appropriate MTU on the VM interface, taking into account the tunneling overhead and any physical switch requirements. If so, push the MTU to the VM either using DHCP options or via metadata.

nova boot --image cirros-0.3.4-x86_64-uec --flavor m1.tiny --nic port-id=$(neutron port-list | grep -w eth0-VM2 | awk '{print $2}') --availability-zone nova:opnfv-odl-ubuntu --key-name vRouterKey --user-data /opt/stack/opnfv_os_ipv6_poc/set_mtu.sh VM2
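The MTU to push to a VM is the MTU of the underlying physical path minus the tunnel encapsulation overhead. The arithmetic can be sketched as follows. The source does not state which tunnel type this lab uses, so the 50-byte figure is an assumption that applies only to VXLAN over IPv4, and the function and variable names are hypothetical.

```shell
# tunnel_mtu <physical-mtu> <overhead-bytes>
# Prints the largest MTU a VM interface can use without fragmentation.
tunnel_mtu() {
    echo $(( $1 - $2 ))
}

# Assumption: VXLAN over IPv4, which adds 50 bytes per packet:
# 20 (outer IPv4) + 8 (UDP) + 8 (VXLAN) + 14 (inner Ethernet).
VXLAN_IPV4_OVERHEAD=50

# Usage, for a physical path with the common 1500-byte MTU:
#   tunnel_mtu 1500 "$VXLAN_IPV4_OVERHEAD"
```

Other encapsulations have different overheads, so the second argument should be adjusted to match the actual deployment.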

11. Confirm that both the VMs are successfully booted.

nova list

nova console-log VM1

nova console-log VM2

12. Let's manually spawn a radvd daemon inside the ipv6-router namespace (to simulate an external router). To do this, we have to identify the ipv6-router namespace and enter it.

sudo ip netns exec qrouter-$(neutron router-list | grep -w ipv6-router | awk '{print $2}') bash

# On successful execution of the above command, you will be in the router namespace.

# Configure the IPv6 address on the <qr-xxx> iface.

export router_interface=$(ip a s | grep -w "global qr-*" | awk '{print $7}')

ip -6 addr add 2001:db8:0:1::1 dev $router_interface

# Update the sample radvd.conf file with the $router_interface and spawn radvd daemon inside the namespace to simulate an external IPv6 router.

cp /opt/stack/opnfv_os_ipv6_poc/scenario2/radvd.conf /tmp/radvd.$router_interface.conf

sed -i 's/$router_interface/'$router_interface'/g' /tmp/radvd.$router_interface.conf

radvd -C /tmp/radvd.$router_interface.conf -p /tmp/br-ex.pid.radvd -m syslog

Configure the downstream route pointing to the eth0 iface of vRouter.

ip -6 route add 2001:db8:0:2::/64 via 2001:db8:0:1:f816:3eff:fe11:1111

Note: The routing table should now look similar to the one shown below.

ip -6 route show

2001:db8:0:1::1 dev qr-42968b9e-62 proto kernel metric 256

2001:db8:0:1::/64 dev qr-42968b9e-62 proto kernel metric 256 expires 86384sec

2001:db8:0:2::/64 via 2001:db8:0:1:f816:3eff:fe11:1111 dev qr-42968b9e-62 proto ra metric 1024 expires 29sec

fe80::/64 dev qg-3736e0c7-7c proto kernel metric 256

fe80::/64 dev qr-42968b9e-62 proto kernel metric 256
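The next-hop address 2001:db8:0:1:f816:3eff:fe11:1111 used in the route is not arbitrary: its lower 64 bits are the modified EUI-64 identifier of the MAC address fa:16:3e:11:11:11 that was assigned to the eth0-vRouter port. The derivation can be sketched as below; the function name is hypothetical, and the result only matches the real address if the interface forms its SLAAC address from EUI-64 rather than from a privacy or stable-random identifier.

```shell
# mac_to_eui64 <mac>
# Prints the modified EUI-64 interface identifier for a MAC address:
# flip the universal/local bit (0x02) of the first octet and insert
# ff:fe between the third and fourth octets.
mac_to_eui64() {
    _old_ifs=$IFS
    IFS=:
    set -- $1            # split the MAC into six octets: $1 .. $6
    IFS=$_old_ifs
    _first=$(printf '%02x' $(( 0x$1 ^ 0x02 )))
    # Groups are printed with their leading zeros, which is still valid IPv6 text.
    printf '%s%s:%sff:fe%s:%s%s\n' "$_first" "$2" "$3" "$4" "$5" "$6"
}

# Usage:
#   echo "2001:db8:0:1:$(mac_to_eui64 fa:16:3e:11:11:11)"
```

Under the same assumption, the function also predicts the address a VM should obtain in the 2001:db8:0:2::/64 prefix from the MAC address given to its port.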

Now, let us ssh to one of the VMs (say VM1) to confirm that it has successfully configured the IPv6 address using SLAAC with prefix from vRouter.

Note: Get the IPv4 address associated with VM1. It can be read from the output of the nova list command.

ssh -i /home/odl/vRouterKey cirros@<VM1-ipv4-address>

If everything goes well, ssh will be successful and you will be logged into VM1. Run some commands to verify that IPv6 addresses are configured on eth0 interface.

ip address show

The above command should display an IPv6 address with a prefix of 2001:db8:0:2::/64. Now let us ping an external IPv6 address.

ping6 2001:db8:0:1::1

If the above ping6 command succeeds, it implies that vRouter was able to successfully forward the IPv6 traffic to reach external ipv6-router.

You can now exit the ipv6-router namespace.

exit

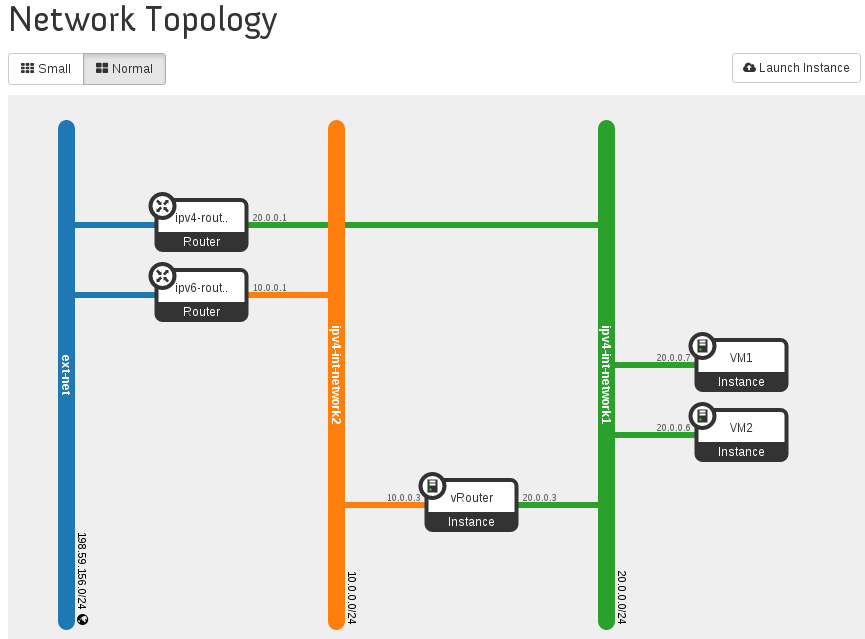

The Network Topology of this setup would be as follows.

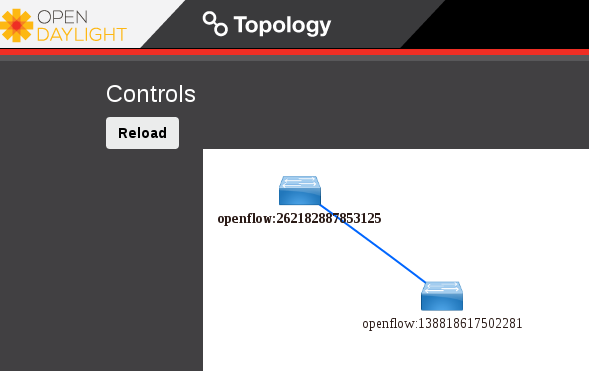

ODL DLUX UI would display the two nodes as shown below.