Spirent Virtual Cloud Test Lab

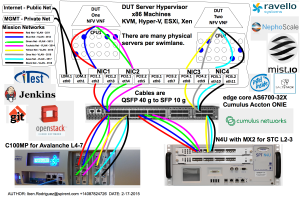

A community-provided bare-metal resource hosted at Nephoscale, used for public SDN/NFV testing and for the OpenDaylight, OpenStack, and OPNFV projects.

- Lab name: Spirent VCT Lab

- Lab location: USA West and East availability zones

- Who to contact: dl-vct@spirent.com (Iben Rodriguez)

- Any main focus areas: Network Testing and Measurement - SDN and NFV Technology

- Any other details that should be shared to help increase involvement: This lab is used to develop the low-level test scripts used to identify functional and performance limits of network devices.

We have various hardware and software in the lab linked together with 40 Gigabit Ethernet. Most of the servers are whitebox x86 machines, with ARM CPUs in the pipeline.

As much as possible, all activities should be scripted via Jenkins jobs using: https://jenkins.vctlab.com:9443/

Spirent VCT Lab is currently working on the following environments (three OpenStack releases plus VMware ESXi and Hyper-V), each deployed on a different *physical* hardware configuration:

- OpenStack Juno (Ubuntu 14.04.1 LTS, 10 cores, 64GB RAM, 1TB SATA, 40 Gbps) - snsj53 (used for 40 Gbps SR-IOV performance testing)

- OpenStack Kilo (CentOS 7.1, 10 cores, 64GB RAM, 1TB SATA, 40 Gbps) - snsj54 (shared between OPNFV and 40 Gbps SR-IOV performance testing)

- VMware ESXi 6.0 (CentOS 6.5, 10 cores, 64GB RAM, 1TB SATA, 10 Gbps) - snsj67

- OpenStack Icehouse – 2014.1.3 release (CentOS 6.5, 20 cores, 64GB RAM, 1TB SATA, 10 Gbps) - snsj69

- Microsoft Windows 2012 R2 Hyper-V (10 cores, 64GB RAM, 1TB SATA, 10 Gbps) - snsj76

There are a number of different networks referenced in the VPTC Design Blueprint.

- Public Internet – 1 GbE

- Private Management – 1 GbE

- Mission Clients – 40/10 GbE

- Mission Servers – 40/10 GbE

- These can be added or removed as specified by the test methodology.

- There are 8 x 10 GbE SFP+ ports available on a typical C100MP used for Avalanche Layer 4-7 testing.

- The Spirent N4U chassis offers 2 x 40 GbE QSFP+ ports with the MX-2, used for Spirent TestCenter Layer 2-3 testing.

- There are 2 x Cumulus switches, each with 32 x 40 GbE QSFP+ ports, for a total capacity of 256 x 10 GbE ports.

- We use QSFP+ to SFP+ breakout cables to convert a single 40 GbE port into 4 x 10 GbE ports.

- Together these offer a flexible solution that allows up to 8 tests to run simultaneously with physical traffic generators.

Assuming a 10-to-1 oversubscription ratio, we could handle 80 customers with the current environment.
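The capacity figures above follow from simple multiplication. A minimal sketch of that arithmetic is shown below; the constant and function names are illustrative only and are not part of any lab tooling.

```python
# Figures taken from the lab description; names are hypothetical.
SWITCHES = 2                 # Cumulus switches
QSFP_PORTS_PER_SWITCH = 32   # 40 GbE QSFP+ ports per switch
BREAKOUT_FACTOR = 4          # one 40 GbE port breaks out into 4 x 10 GbE
SIMULTANEOUS_TESTS = 8       # tests that can run at the same time
OVERSUBSCRIPTION = 10        # assumed 10-to-1 oversubscription ratio


def total_10g_ports(switches=SWITCHES,
                    ports_per_switch=QSFP_PORTS_PER_SWITCH,
                    breakout=BREAKOUT_FACTOR):
    """Number of 10 GbE ports available when every QSFP+ port is broken out."""
    return switches * ports_per_switch * breakout


def supported_customers(tests=SIMULTANEOUS_TESTS, ratio=OVERSUBSCRIPTION):
    """Customers the lab can serve at the given oversubscription ratio."""
    return tests * ratio


if __name__ == "__main__":
    print(total_10g_ports())      # 2 * 32 * 4 = 256
    print(supported_customers())  # 8 * 10 = 80
```

Changing the oversubscription ratio or the number of switches in the constants shows how the customer count would scale if the environment grows.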

For example:

- An 80 Gbps test would need 4 port pairs (8 ports) of 10 GbE each and require 8 mission networks.

- Multiple clients sharing common test hardware might have dedicated management networks for their DUTs yet communicate with the APIs and Management services via a shared DMZ network protected by a firewall.

- SSL and IPsec VPN will typically be leveraged to connect networks across the untrusted Internet or other third-party networks.

- Stand-alone DUT servers using STCv and AVv traffic generators could easily scale to hundreds of servers as needed.
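The port sizing in the first example can be sketched as a small helper. This is only an illustration under two assumptions that the page implies but does not state outright: the target throughput is counted as the aggregate across all ports, and each port gets its own mission network. The function name `size_test` is hypothetical.

```python
import math


def size_test(target_gbps, port_speed_gbps=10):
    """Estimate ports, port pairs, and mission networks for a test.

    Assumptions (not confirmed by the lab documentation):
    - target_gbps is the aggregate capacity over all ports;
    - ports are always used in pairs;
    - one mission network is needed per port.
    """
    ports = math.ceil(target_gbps / port_speed_gbps)
    if ports % 2:
        ports += 1  # round up to a whole number of port pairs
    return {
        "ports": ports,
        "port_pairs": ports // 2,
        "mission_networks": ports,
    }


if __name__ == "__main__":
    # 80 Gbps over 10 GbE ports: 8 ports, 4 port pairs, 8 mission networks
    print(size_test(80))
```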